Blog & Insights

Keyva CTO Anuj Tuli discusses our expertise in developing point-to-point integrations.

(Post title: CTO Talks: Integrations)

Post published 2023-07-17

This article explores the process of utilizing Infrastructure as Code with Terraform to provision Azure Resources for creating storage and managing Terraform Remote State based on the environment.

Terraform State

Terraform state refers to the information and metadata that Terraform uses to manage your infrastructure. It includes details about the resources created, their configurations, dependencies, and relationships.

Remote state storage enhances security by keeping sensitive information off local machines and allowing controlled access to the state. It enables state locking, which prevents conflicting concurrent changes. It also simplifies recovery and auditing by acting as a single source of truth and maintaining a historical record of changes.

Azure Storage

Azure Storage is a highly scalable and durable solution for storing various types of data. To store Terraform state in Azure Storage, we will be utilizing the Azure Blob Storage backend. Azure Blob Storage is a component of Azure Storage that provides a scalable and cost-effective solution for storing large amounts of unstructured data, such as documents, images, videos, and log files.

Note: This article assumes you have Linux and Terraform experience.

Prerequisites

- Terraform Installed

- Azure Account

- Azure CLI

Code

You can find the GitHub Repo here.

Brief Overview of the Directory Structure

    /terraform-aks/
    ├── modules
    │   └── 0-remotestate
    └── tf
        └── dev
            └── global
                └── 0-remotestate

- /terraform-aks: This is the top-level directory.

- /modules: Within this directory, we store the child modules that will be invoked by the root modules located in /tf/.

- /0-remotestate: This sub-directory, found within the /modules/ directory, contains the necessary resources for creating storage used to store our remote state.

- /tf: The configurations for all root modules are located in this directory.

- /dev: Representing the environment, this directory contains configurations specific to the dev environment.

- /global: This subdirectory houses configurations that are shared across different regions.

- /0-remotestate: Located within the /global/ directory, this subdirectory represents the root module responsible for calling the child module located in /modules/0-remotestate/ in order to create our storage.

Usage

To use the module, modify main.tf in the root module and main.tf in the child module to suit your requirements.

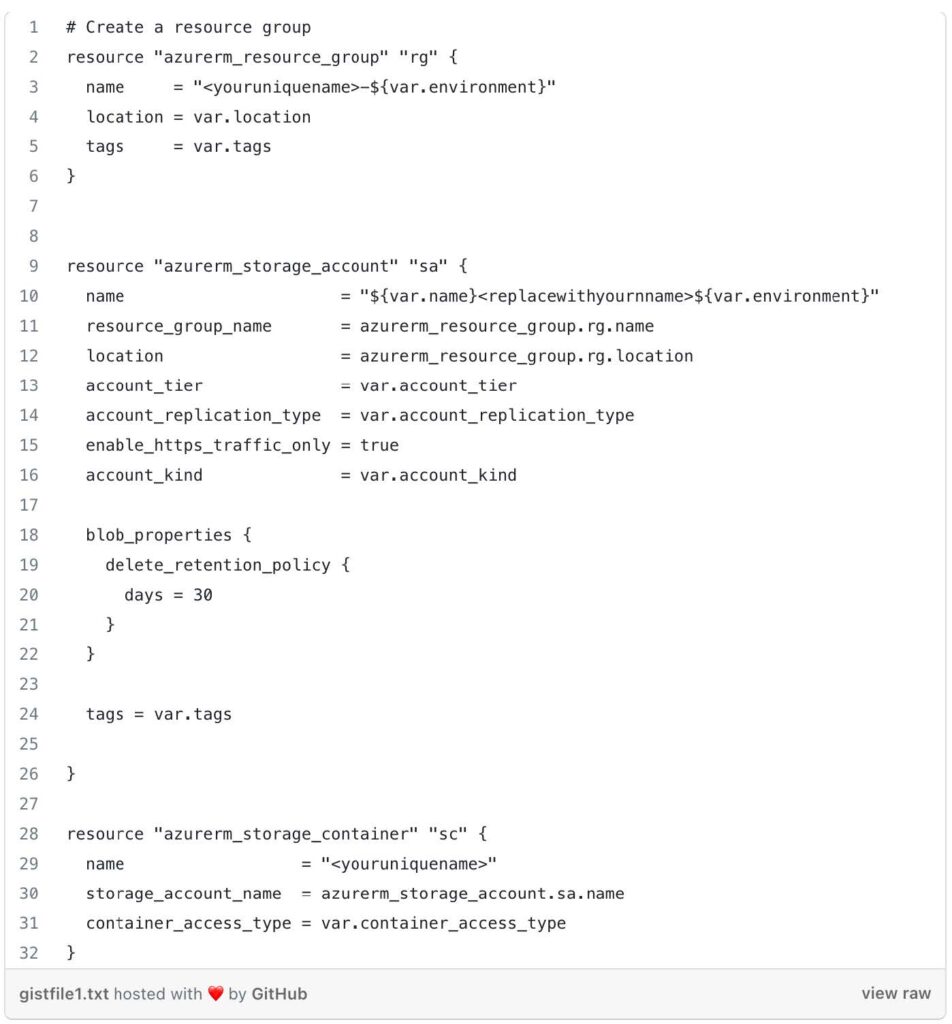

Let’s take a look at the resources needed to create our storage for our remote state within our child module located in terraform-aks/modules/0-remotestate/main.tf

We are creating the following:

Resource Group: A resource group is a logical container for grouping and managing related Azure resources.

Storage Account: Storage accounts are used to store and manage large amounts of unstructured data.

Storage Container: A storage container is a logical entity within a storage account that acts as a top-level directory for organizing and managing blobs. It provides a way to organize related data within a storage account.

We use the Terraform Azure provider (azurerm) to define a resource group (azurerm_resource_group) in Azure. It creates a resource group with a dynamically generated name, using a combination of <youruniquename> and the value of the var.environment variable. The resource group is assigned a location specified by the var.location variable, and tags specified by the var.tags variable.

The Azure storage account (azurerm_storage_account) block is used to create a storage account. The name attribute combines <var.name>, a placeholder that you should replace with your own name, with the value of the var.environment variable.

An Azure storage container (azurerm_storage_container) is defined. The name attribute specifies the name of the container using <youruniquename> placeholder, which should be replaced with your desired name.

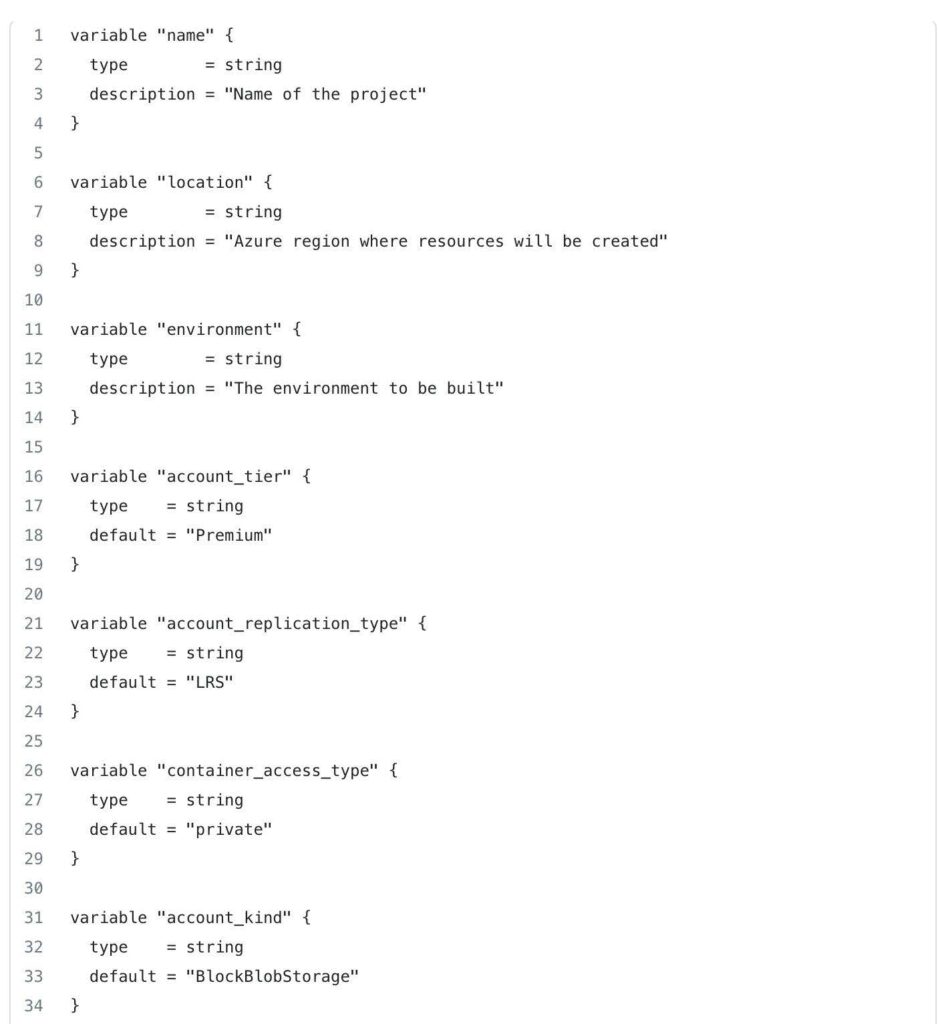

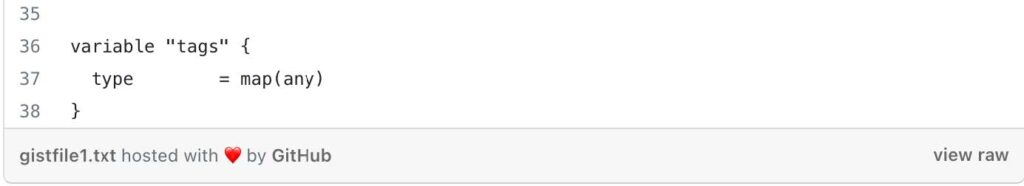
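Because the storage account name is built from a name plus the environment, it is worth checking the combined value before running Terraform. The sketch below is a Python illustration, not part of the module: it assumes the two values are simply concatenated (the exact expression lives in the screenshot above), and the function name is hypothetical. The rule it checks, 3 to 24 characters using only lowercase letters and digits, is Azure's naming constraint for storage accounts.

```python
import re


def storage_account_name(name: str, environment: str) -> str:
    """Combine name and environment, then validate against Azure's rule.

    Assumption: the module concatenates the two values directly.
    """
    candidate = f"{name}{environment}".lower()
    # Azure storage account names: 3-24 characters, lowercase letters and digits only.
    if not re.fullmatch(r"[a-z0-9]{3,24}", candidate):
        raise ValueError(f"invalid storage account name: {candidate!r}")
    return candidate


print(storage_account_name("aksdemo", "dev"))  # aksdemodev
```

A name such as "aks-demo" would be rejected here because of the hyphen, which is the kind of error that otherwise only surfaces during terraform apply.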

Next, let’s take a look at the variables inside terraform-aks/modules/0-remotestate/variables.tf

These variables provide flexibility and configurability to the 0-remotestate module, allowing you to customize various aspects of the resource provisioning process, such as names, locations, access types, and more, based on your specific requirements and preferences.

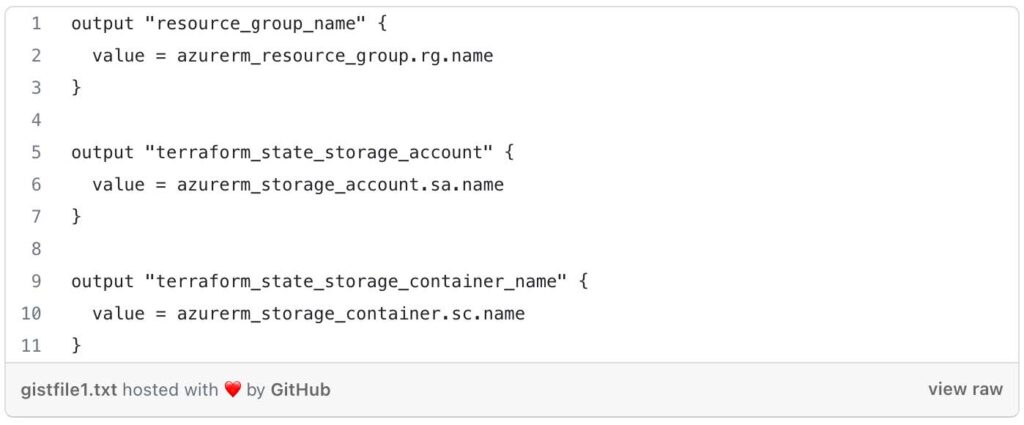

Next, let’s take a look at the outputs located in terraform-aks/modules/0-remotestate/outputs.tf

By defining these outputs, the outputs.tf file allows you to capture and expose specific information about the created resource group, storage account, and container from the 0-remotestate module.

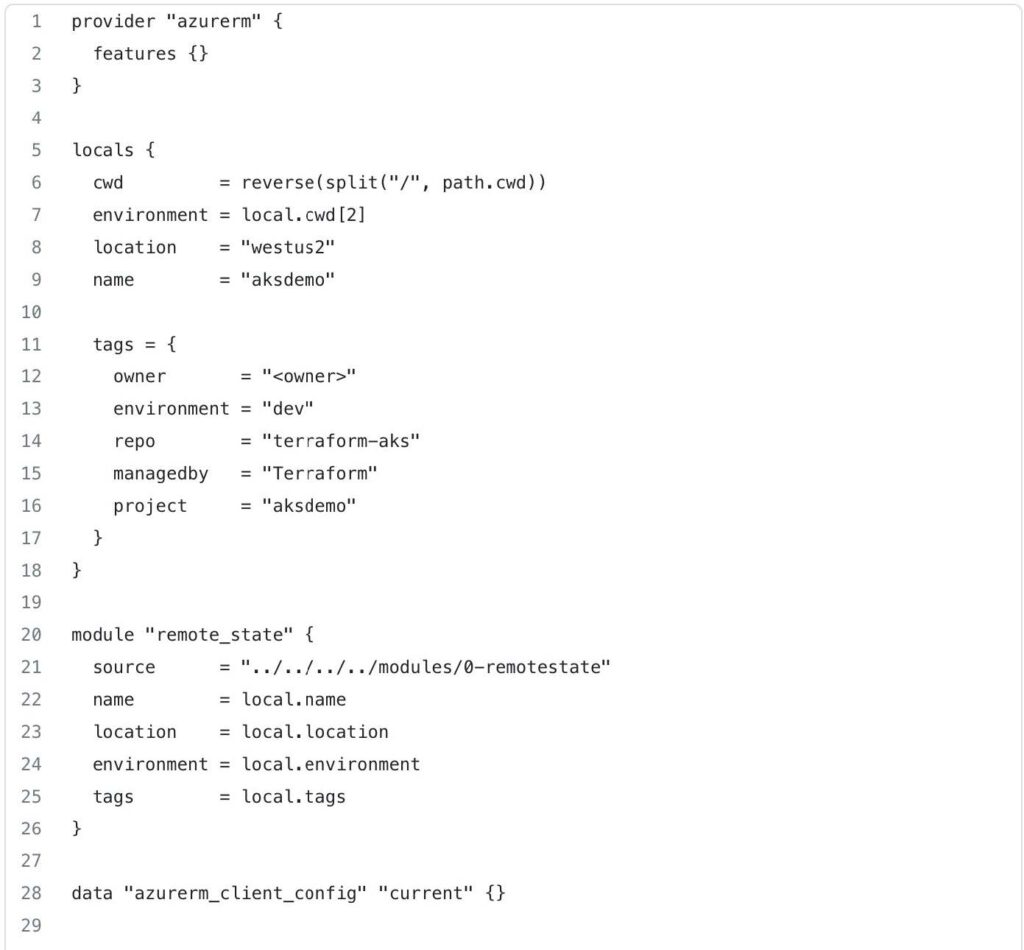

Let’s navigate to our root module located at terraform-aks/tf/dev/global/0-remotestate/main.tf

This code begins by defining the azurerm provider, which enables Terraform to interact with Azure resources. The features {} block is empty, indicating that no specific provider features are being enabled or configured in this case.

The locals block is used to define local variables. In this case, it defines the following variables:

- cwd: This variable extracts the current working directory path, splits it by slashes ("/"), and then reverses the resulting list. This is done to extract specific values from the path.

- environment: This variable captures the third element from the cwd list, representing the environment.

- location: This variable is set to the value "westus2", specifying the Azure region where resources will be deployed.

- name: This variable is set to the value "aksdemo", representing the name of the project or deployment.

- tags: This variable is a map that defines various tags for categorizing and organizing resources. The values within the map can be customized based on your specific needs.
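To see why the third element of the reversed path is the environment, here is the same split-and-reverse logic as a small Python sketch. It only mirrors the idea described above; the function name and the checkout location are hypothetical, and the actual Terraform expression is the one in the repository.

```python
def environment_from_cwd(cwd: str) -> str:
    """Split the path on "/", reverse it, and take the third element."""
    reversed_parts = list(reversed(cwd.split("/")))
    return reversed_parts[2]


# Hypothetical checkout location; only the trailing directories matter.
cwd = "/home/user/terraform-aks/tf/dev/global/0-remotestate"

print(list(reversed(cwd.split("/")))[:3])  # ['0-remotestate', 'global', 'dev']
print(environment_from_cwd(cwd))           # dev
```

The design relies on the directory layout: running from a different depth in the tree would select a different path element, so the commands must be run from the 0-remotestate root module directory.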

This code block declares a module named remote_state and configures it to use the module located at ../../../../modules/0-remotestate. The source parameter specifies the relative path to the module. The remaining parameters (name, location, environment, and tags) are passed to the module as input variables, using values from the local variables defined earlier.

This code also includes a data block to fetch the current Azure client configuration. This data is useful for authentication and obtaining access credentials when interacting with Azure resources.

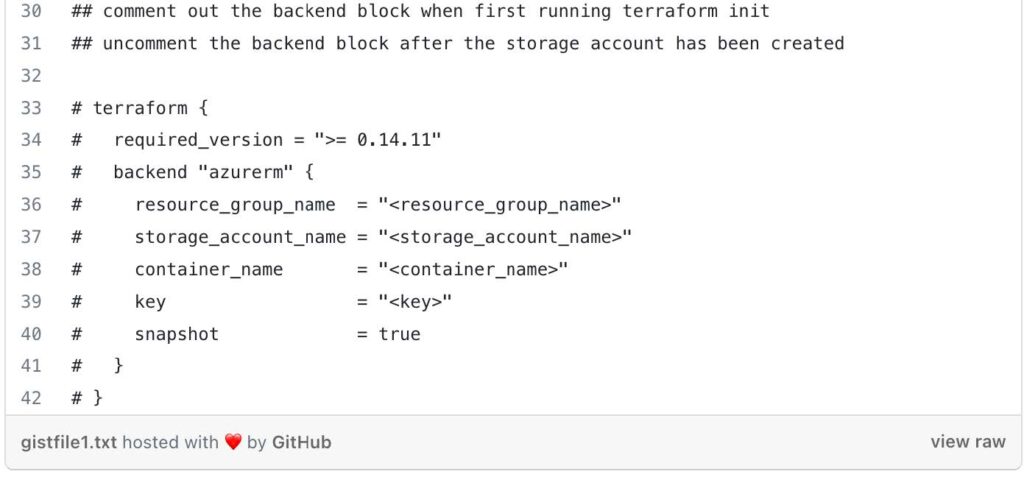

The commented out terraform block represents a backend configuration for storing Terraform state remotely. This block is typically uncommented after the necessary Azure resources (resource group, storage account, container, and key) are created. It allows you to configure remote state storage in Azure Blob Storage for better state management.

Now that we have set up the root module in the terraform-aks/tf/dev/global/0-remotestate/ directory, it's time to provision the necessary resources using the child module located at terraform-aks/modules/0-remotestate. The root module acts as the orchestrator, leveraging the functionalities and configurations defined within the child module to create the required infrastructure resources.

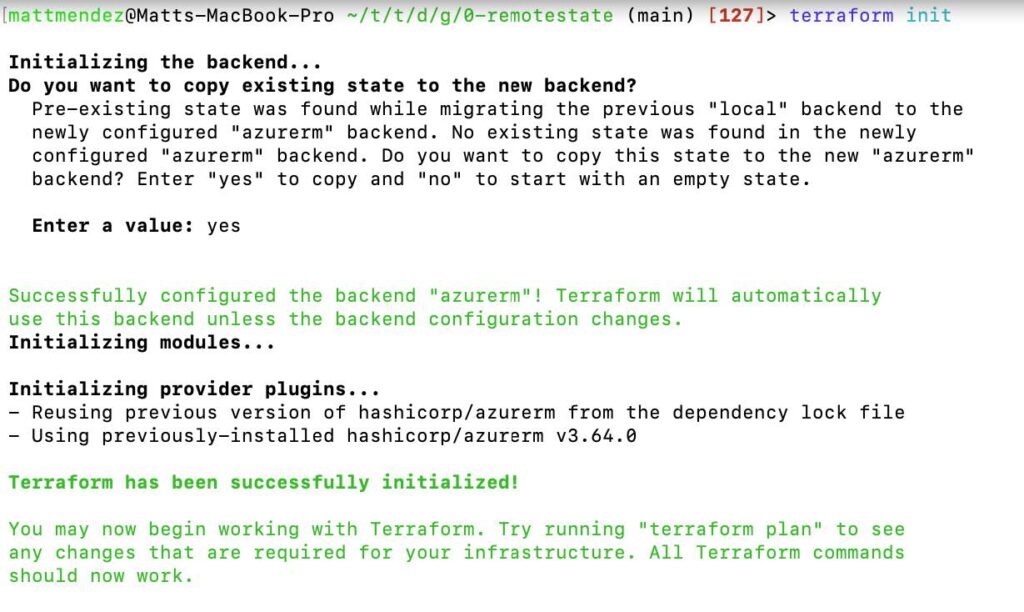

- From the directory terraform-aks/tf/dev/global/0-remotestate, with the backend commented out, run:

- terraform init

- terraform plan

- terraform apply

After executing terraform apply and successfully creating your storage resources, you can proceed to uncomment the backend block in the main.tf file. This block contains the configuration for storing your Terraform state remotely. Once uncommented, run another terraform init command to initialize the backend and store your state in the newly created storage account. This ensures secure and centralized management of your Terraform state, enabling collaborative development and simplified infrastructure updates.

Enter a value of yes when prompted.

This article details the process in Amazon Elastic Container Service to set up email notifications for stopped tasks.

Amazon Elastic Container Service (ECS)

Amazon Elastic Container Service (ECS) is a fully managed container orchestration service provided by AWS. It enables you to easily run and scale containerized applications in the cloud. ECS simplifies the deployment, management, and scaling of containers by abstracting away the underlying infrastructure.

An ECS task represents a logical unit of work and defines how containers are run within the service. A task can consist of one or more containers that are tightly coupled and need to be scheduled and managed together.

Amazon Simple Notification Service (SNS)

Amazon Simple Notification Service is a fully managed messaging service provided by AWS that enables you to send messages or notifications to various distributed recipients or subscribers. SNS simplifies the process of sending messages to a large number of subscribers, such as end users, applications, or other distributed systems, by handling the message distribution and delivery aspects.

Amazon EventBridge

Amazon EventBridge is a fully managed event bus service provided by AWS. It enables you to create and manage event-driven architectures by integrating and routing events from various sources to different target services. EventBridge acts as a central hub for event routing and allows decoupled and scalable communication between different components of your applications.

Get Started

This demo assumes you have a running ECS cluster.

1. Configure an SNS topic.

- In the AWS console, navigate to Simple Notification Service

- Select create topic

- For type, choose standard

- Name: (choose name. e.g. TaskStoppedAlert)

- Leave the other settings at default, scroll down, and click create topic

2. Subscribe to the SNS topic you created.

- Select create subscription.

- Leave the topic ARN as default

- For protocol, select email

- For endpoint, enter a valid email address

- Click create subscription

3. Confirm the subscription.

- Open the inbox for the email address you entered, find the AWS Notifications email, and click the confirm subscription link in it

- Verify that the subscription is confirmed

4. Create an Amazon EventBridge rule to trigger the SNS Topic when the state changes to stopped on an ECS Task

- Navigate to Amazon EventBridge in the AWS console

- Click create rule

- Name your rule (e.g. ecs-task-stop)

- For rule type, select rule with an event pattern

- For event source, choose AWS events or EventBridge partner events

- For creation method, choose custom pattern (JSON editor)

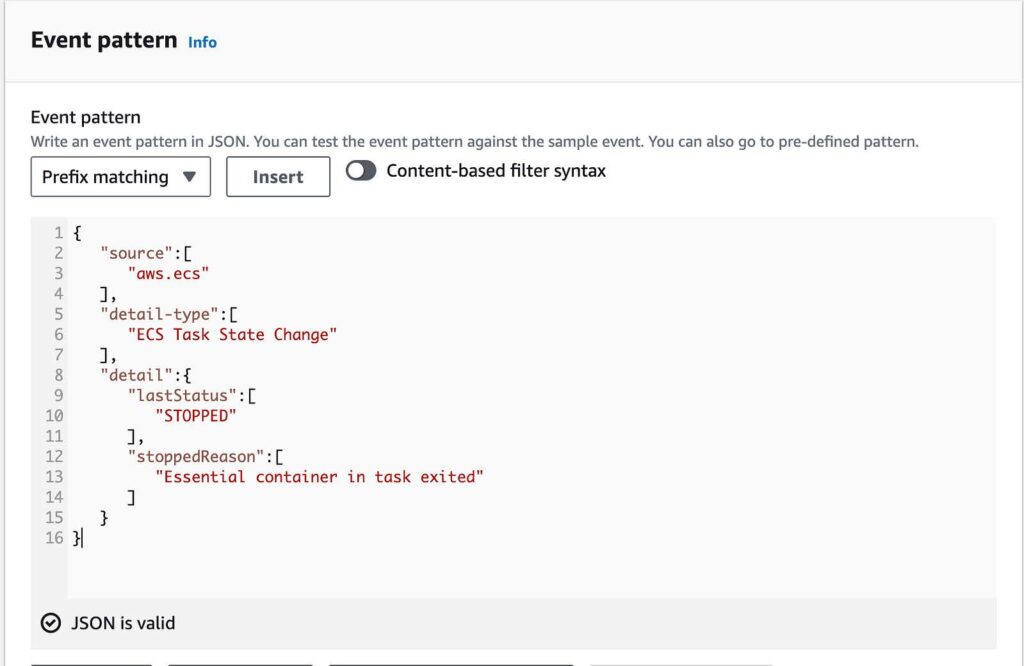

- Paste in the following code in the event pattern

    {
      "source": ["aws.ecs"],
      "detail-type": ["ECS Task State Change"],
      "detail": {
        "lastStatus": ["STOPPED"],
        "stoppedReason": ["Essential container in task exited"]
      }
    }

Below is an example of the code

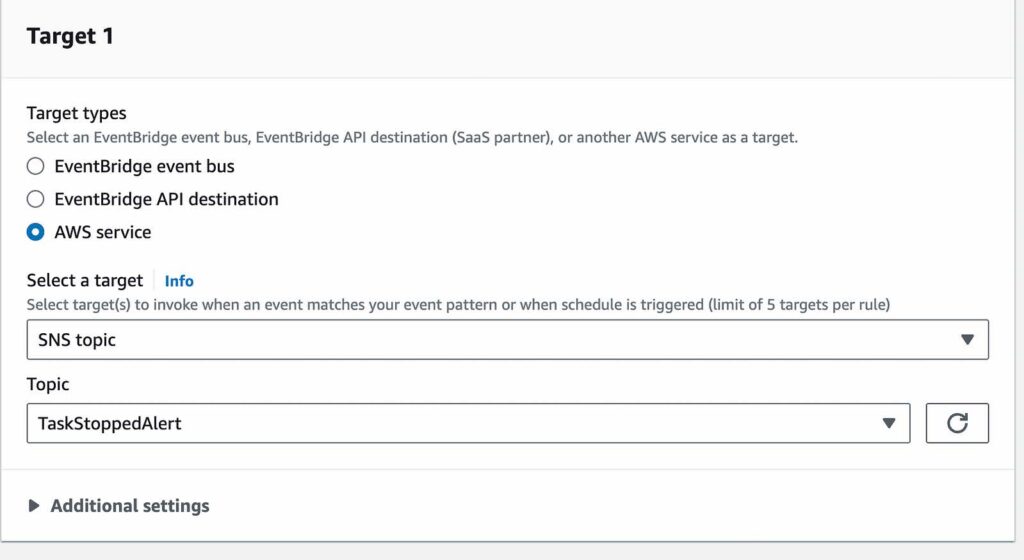

- For target types, select AWS service

- For target, select SNS Topic

- For topic, select the topic you created

- Leave the rest default and select create rule
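To get a feel for which events the pattern in step 4 selects, the following Python sketch applies a deliberately simplified version of the matching rules: every key in the pattern must be present in the event, a list means "any of these values", and nested objects are matched recursively. This is an illustration only, not EventBridge's full matching logic, and the two sample events are hypothetical and heavily trimmed.

```python
import json

# Event pattern from step 4, written as a Python dict.
EVENT_PATTERN = {
    "source": ["aws.ecs"],
    "detail-type": ["ECS Task State Change"],
    "detail": {
        "lastStatus": ["STOPPED"],
        "stoppedReason": ["Essential container in task exited"],
    },
}


def matches(pattern: dict, event: dict) -> bool:
    """Simplified matcher: all pattern keys must match; lists are OR-ed."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True


# Hypothetical, trimmed events; real events carry many more fields.
stopped_event = {
    "source": "aws.ecs",
    "detail-type": "ECS Task State Change",
    "detail": {
        "lastStatus": "STOPPED",
        "stoppedReason": "Essential container in task exited",
    },
}
running_event = {**stopped_event, "detail": {"lastStatus": "RUNNING"}}

print(matches(EVENT_PATTERN, stopped_event))  # True
print(matches(EVENT_PATTERN, running_event))  # False
print(json.dumps(EVENT_PATTERN, indent=2))    # JSON form of the pattern
```

Note that the pattern only matches tasks whose stoppedReason is exactly "Essential container in task exited"; tasks stopped for other reasons would not trigger the notification.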

5. Add permissions that enable EventBridge to publish to your SNS topic.

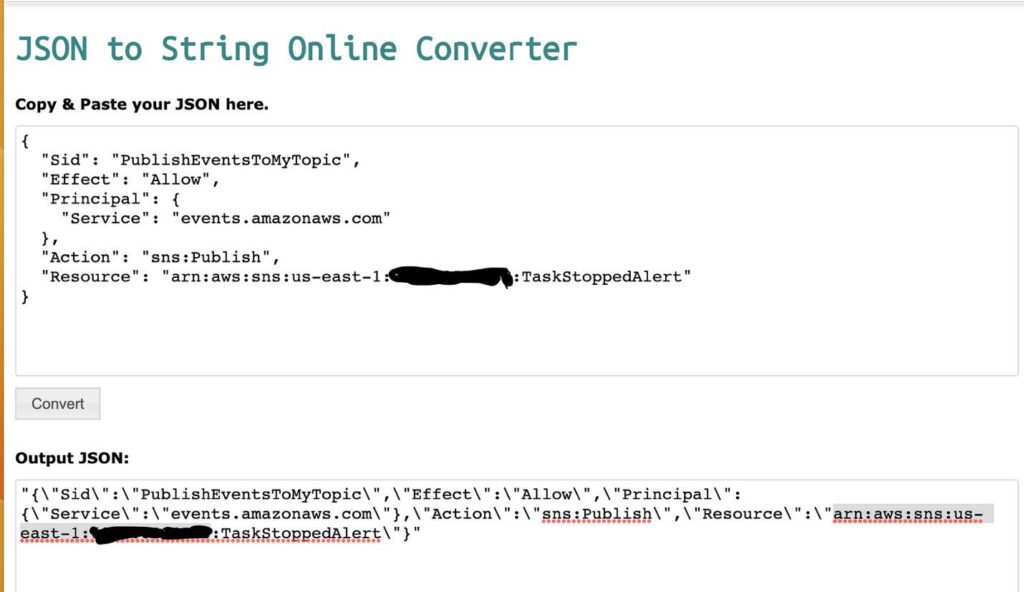

- Use a JSON converter to convert the following into a string. Click here for the link to a JSON converter.

    {
      "Sid": "PublishEventsToMyTopic",
      "Effect": "Allow",
      "Principal": {
        "Service": "events.amazonaws.com"
      },
      "Action": "sns:Publish",
      "Resource": "arn:aws:sns:region:account-id:topic-name"
    }

Below is an example of how to use the JSON converter with the above code.

- Add the string you created in the previous step to the “Statement” collection inside the “Policy” attribute

- Use the aws sns set-topic-attributes command to set the new policy.

    aws sns set-topic-attributes --topic-arn "arn:aws:sns:region:account-id:topic-name" \
        --attribute-name Policy \
        --attribute-value "<your-policy-string>"

Below is an example of how I used the aws sns set-topic-attributes command to set the new policy. This also contains the string I created using the JSON converter that adds the permissions.

- Verify the permissions were added with the aws sns get-topic-attributes --topic-arn command
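If you prefer not to use an online JSON converter, the same string can be produced locally. The sketch below uses Python's standard json module to append the new statement to a policy document and serialize the result as a single-line string suitable for --attribute-value. The existing_policy value is a hypothetical stand-in; in practice you would start from the Policy attribute of your own topic, and the function name is an assumption for illustration.

```python
import json

# Statement from step 5; replace region, account-id and topic-name with your own.
new_statement = {
    "Sid": "PublishEventsToMyTopic",
    "Effect": "Allow",
    "Principal": {"Service": "events.amazonaws.com"},
    "Action": "sns:Publish",
    "Resource": "arn:aws:sns:region:account-id:topic-name",
}


def add_statement(policy: dict, statement: dict) -> str:
    """Return the policy with the statement appended, as a compact JSON string."""
    updated = dict(policy)
    updated["Statement"] = list(policy.get("Statement", [])) + [statement]
    return json.dumps(updated, separators=(",", ":"))


# Hypothetical stand-in for the topic's current policy document.
existing_policy = {"Version": "2012-10-17", "Statement": []}

policy_string = add_statement(existing_policy, new_statement)
print(policy_string)
```

The printed string contains double quotes, so wrap it in single quotes when pasting it into a shell command.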

6. Test your rule

Verify that the rule is working by running a task that exits shortly after it starts.

- Navigate to ECS in the AWS Console

- Choose task definitions

- Select create new task definition

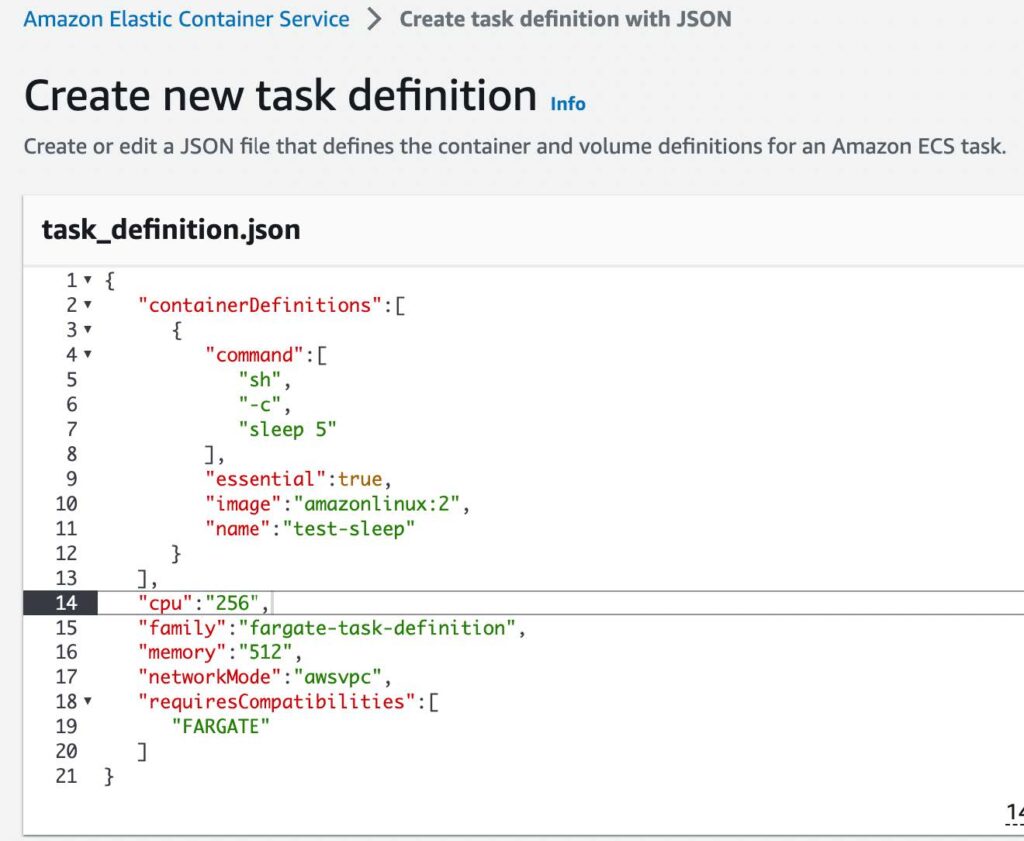

- Select create a new task definition with JSON

- In the JSON editor box, replace the contents with the following code

    {
      "containerDefinitions": [
        {
          "command": ["sh", "-c", "sleep 5"],
          "essential": true,
          "image": "amazonlinux:2",
          "name": "test-sleep"
        }
      ],
      "cpu": "256",
      "family": "fargate-task-definition",
      "memory": "512",
      "networkMode": "awsvpc",
      "requiresCompatibilities": ["FARGATE"]
    }

Below is an example of how the code looks in the JSON editor

- Select create

7. Run the task.

- Navigate to ECS, select the cluster you want to run the test task in.

- Select Task, and choose run new task

- For Application type, choose Task

- For Task definition > Family > Choose fargate-task-definition

- Select the number of tasks you want to run. I chose one since this is a test task

- Select Create

8. Monitor the task.

If your event rule is configured correctly, you will receive an email message within a few minutes with the event text.

(Post title: ECS: Setting Up Email Notifications For Stopped Tasks)

Post published 2023-07-03

This article reviews the process to upgrade an Amazon DocumentDB cluster from version 4.0 to 5.0 with DMS.

Amazon DocumentDB

Amazon DocumentDB is a fully managed, NoSQL database service provided by AWS. It is compatible with MongoDB, which is a popular open-source document database. Amazon DocumentDB is designed to be highly scalable, reliable, and performant, making it suitable for applications that require low-latency and high-throughput database operations.

AWS Database Migration Service

AWS DMS simplifies the process of database migration by providing an efficient and reliable solution for moving databases to AWS or between different database engines. It supports a wide range of database sources, including on-premises databases, databases running on AWS, and databases hosted on other cloud platforms.

Get Started

This demo assumes you have an existing DocumentDB cluster with version 4.0.

1. Create a new DocumentDB cluster with version 5.0. Use this link to help you get started.

2. Authenticate to your Amazon DocumentDB cluster 4.0 using the mongo shell and execute the following commands:

    db.adminCommand({
      modifyChangeStreams: 1,
      database: "db_name",
      collection: "",
      enable: true
    });

AWS DMS requires access to the cluster’s change streams.
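Since later steps connect to the cluster from Python, the same command can also be expressed as a Python dictionary. The sketch below only builds the command document; the helper name is hypothetical. With PyMongo, a document like this would typically be sent with client.admin.command(...), which is shown as a comment because it needs a live cluster.

```python
def modify_change_streams_command(database: str, collection: str = "",
                                  enable: bool = True) -> dict:
    """Build the modifyChangeStreams command document used in step 2.

    The command name must be the first key; Python dicts keep insertion order.
    """
    return {
        "modifyChangeStreams": 1,
        "database": database,
        "collection": collection,
        "enable": enable,
    }


command = modify_change_streams_command("db_name")
print(command)

# With PyMongo and an authenticated client (not run here):
# client.admin.command(command)
```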

3. Migrate your indexes with the Amazon DocumentDB Index Tool.

- First install PyMongo, click here

- Next, connect to Amazon DocumentDB using Python when TLS is enabled. Use this link to help you get started.

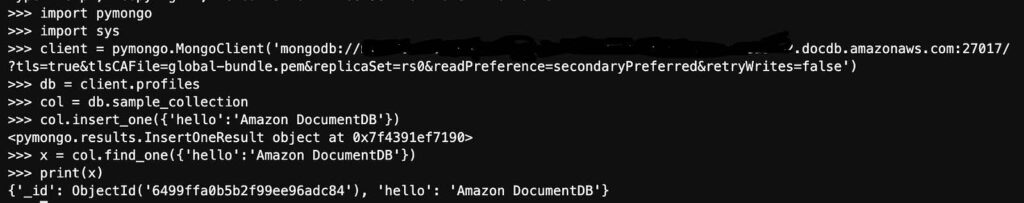

- Below is a sample of how I connected to my DocumentDB cluster 4.0 with python and imported some sample data

connection demonstration with hostname removed

- Install the Amazon DocumentDB Index Tool with this link

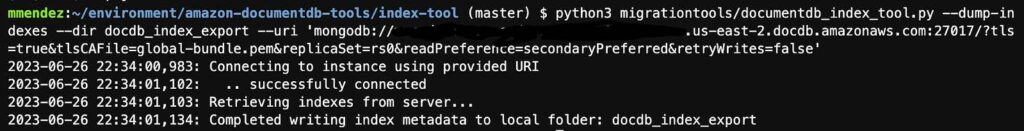

- Export indexes from the source DocumentDB version 4.0 cluster [The image below is an example from my screen]

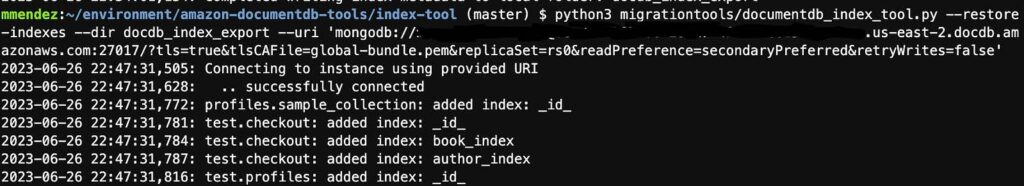

- Restore those indexes in your target Amazon DocumentDB version 5.0 cluster [The following image is an example of how to restore your indexes to your target cluster]

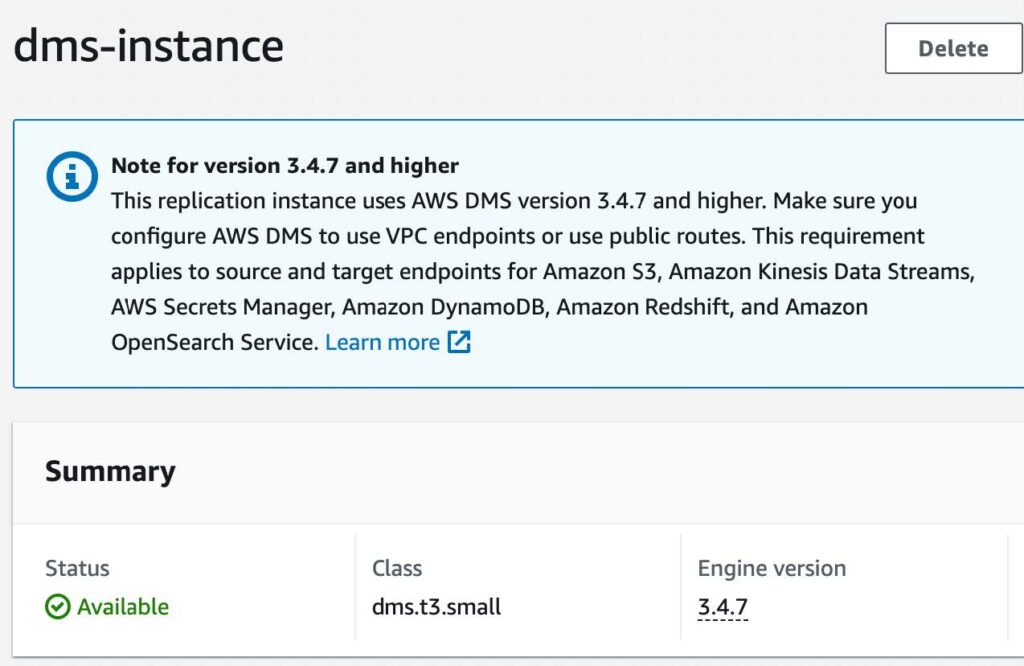
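The Python connection used in step 3 depends on a TLS-enabled connection string. As a rough sketch, the helper below assembles one with the standard library, percent-encoding the credentials so special characters do not break the URI. The function name, the sample credentials, and the CA file name global-bundle.pem are assumptions for illustration; the host is the example server name format used later in this article, and the query options follow the commonly documented DocumentDB defaults, so adjust them to your cluster.

```python
from urllib.parse import quote_plus


def docdb_uri(host: str, username: str, password: str,
              ca_file: str = "global-bundle.pem", port: int = 27017) -> str:
    """Build a TLS connection string for a DocumentDB cluster."""
    user = quote_plus(username)
    secret = quote_plus(password)
    return (
        f"mongodb://{user}:{secret}@{host}:{port}/"
        f"?tls=true&tlsCAFile={ca_file}&replicaSet=rs0"
        "&readPreference=secondaryPreferred&retryWrites=false"
    )


# Hypothetical credentials; the host follows the article's example format.
uri = docdb_uri("source.cluster-hsyfhsia.region.docdb.amazonaws.com",
                "docdbadmin", "p@ss/word")
print(uri)

# With PyMongo installed (not run here):
# client = pymongo.MongoClient(uri)
```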

4. Create a replication instance.

- Navigate to Database Migration Service

- Select replication instances from left menu

- Click on the create replication instance button

- Name: (name of replication instance)

- Description: (description of instance)

- VPC: (use default or any specific VPC)

- For Multi AZ, select Dev or test workload (Single-AZ)

- Uncheck publicly accessible

- Click create

5. Update Security Groups.

- Copy the Private IP address of the new replication instance.

- Select the security group for your DocumentDB instances.

- Click edit inbound rules

- Click add rule

- Select TCP for type

- For port range select 27017

- For source, enter your replication instance’s private IP address you copied with a /32

- Save rules

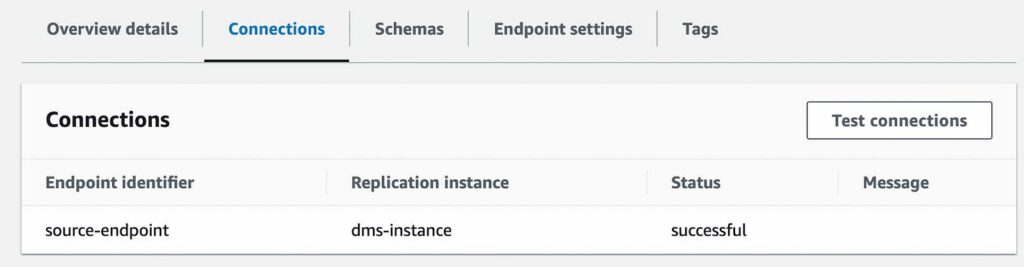
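The /32 suffix in step 5 restricts the inbound rule to exactly one address, the replication instance. A short Python sketch using the standard ipaddress module makes this concrete; the IP address and function name are hypothetical examples.

```python
import ipaddress


def single_host_cidr(private_ip: str) -> str:
    """Return the /32 CIDR block that covers only the given IPv4 address."""
    network = ipaddress.IPv4Network(f"{private_ip}/32")
    return str(network)


cidr = single_host_cidr("10.0.1.25")  # hypothetical replication instance IP
print(cidr)                                      # 10.0.1.25/32
print(ipaddress.ip_network(cidr).num_addresses)  # 1
```

Using a wider range such as /24 would also admit the replication instance, but it would open port 27017 to every address in that subnet.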

6. Create Source endpoint.

- Navigate back to DMS

- Select endpoints on left menu

- Click on the create endpoint button

- Select source endpoint

- Endpoint identifier: (name of the endpoint)

- For source engine, select Amazon DocumentDB

- For access to endpoint database, select provide access information manually

- Server name: (server name of DocumentDB cluster with version 4.0) e.g. source.cluster-hsyfhsia.region.docdb.amazonaws.com

- Port: 27017

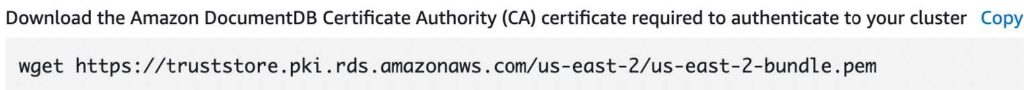

- For Secure Socket Layer (SSL) mode, select verify-full

- Click add new CA certificate

- Click choose file, and find and upload the Certificate Authority; this is the certificate used to connect to the cluster

- For certificate identifier, choose a name

- Click import certificate

- Enter in username and password used to connect to your DocumentDB cluster

- Enter in your database name

- Click create endpoint

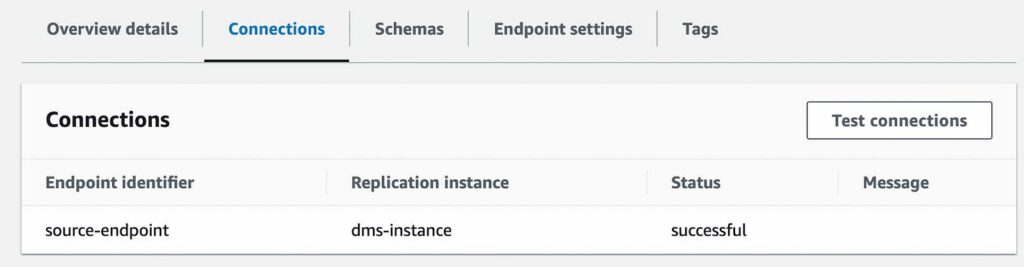

- Test your connection to verify it was set up successfully

7. Create Target Endpoint

- Repeat the steps above to configure your DocumentDB version 5.0 cluster as the Target Endpoint

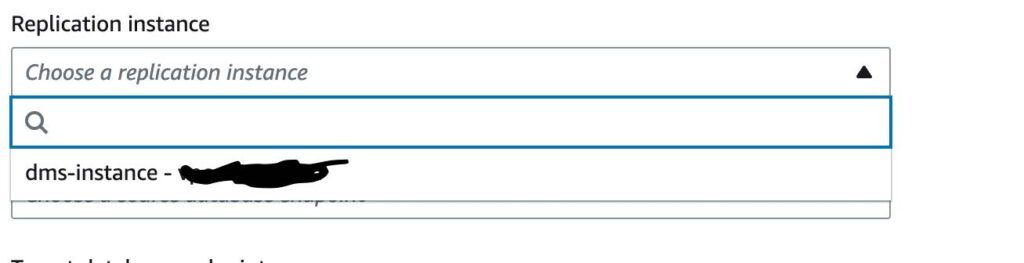

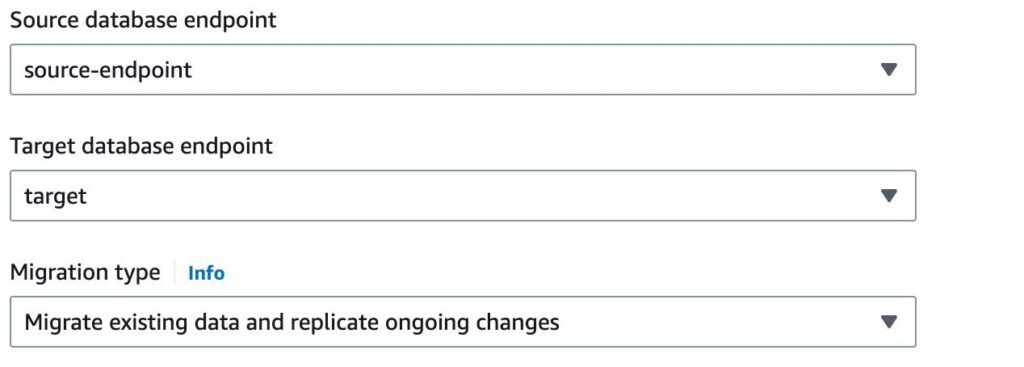

8. Create the Database Migration Task

- Click on the create task button

- Task identifier: (name of the task)

- For replication instance, select the replication instance you created

- For source database endpoint, select your DocumentDB cluster version 4.0

- For target database endpoint, select your newly created DocumentDB cluster version 5.0

- For migration type, select Migrate Existing Data and Replicate Ongoing Changes

- In task settings, under target table preparation mode, select do nothing

- For migration task startup configuration, choose automatically on create; this starts the migration task as soon as it is created.

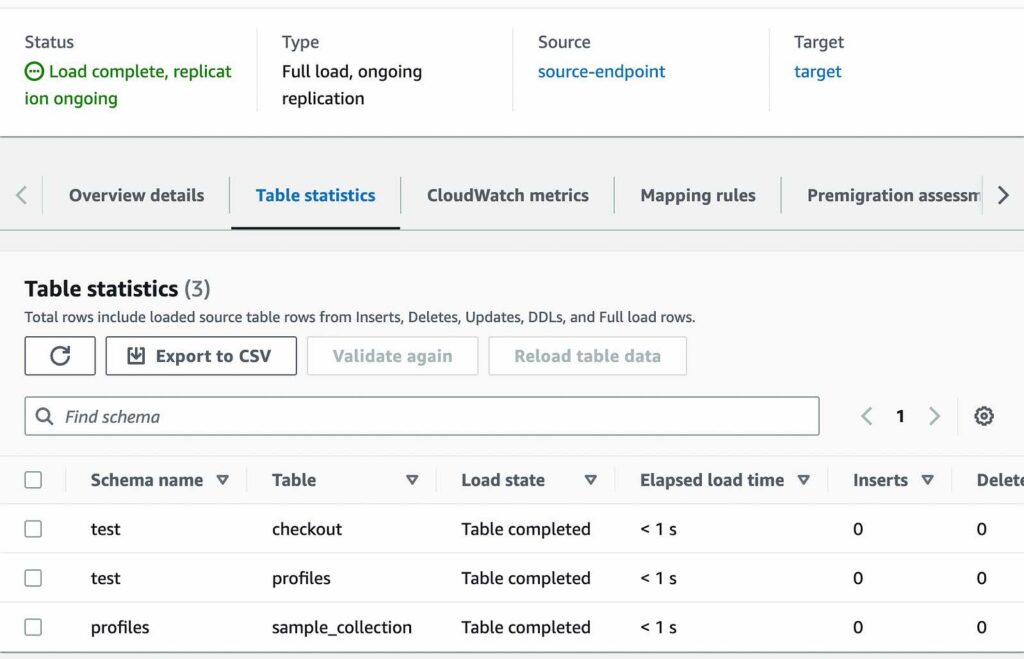

9. Monitor the migration task.

- Once the task has started, you can monitor the progress of Full Load and CDC replication on the individual collection with the Table Statistics tab

- When the full load finishes, AWS DMS has migrated your source Amazon DocumentDB 4.0 cluster to your target Amazon DocumentDB 5.0 cluster and is replicating change events

You are now ready to change your application’s database connection endpoint from your source Amazon DocumentDB 4.0 cluster to your target Amazon DocumentDB 5.0 cluster.

(Post title: Upgrading an Amazon DocumentDB Cluster From Version 4.0 to 5.0 With DMS)

Post published 2023-06-29

Automation is an essential aspect of modern operations, offering numerous benefits such as increased efficiency, reduced errors, and improved productivity. However, implementing automation without proper planning and strategy can lead to disappointing results and wasted resources. To ensure success, organizations need to follow a systematic approach.

At Keyva and Evolving Solutions, we work with an array of clients who range from being highly mature in their automation processes and tools to organizations that are just starting and need guidance to attain operational efficiencies. Across this spectrum, many organizations lack an overarching framework for automation.

To simplify the process, we have outlined the nine essential steps for implementing automation.

- Identify Goals. Begin by identifying your organization's goals and the specific areas where automation can have the most significant impact. Prioritize tasks / processes that are time-consuming, repetitive, and prone to errors.

- Analyze. Once you have identified the tasks / processes for automation, conduct a detailed analysis of each one. Understand the workflow, inputs, outputs, dependencies, and potential bottlenecks. This will help you determine the feasibility and potential benefits of automation.

- Select Automation Tools. Research the available automation tools and technologies, including open-source solutions, that fit your organization's needs. Consider factors such as ease of use, scalability, compatibility with existing systems, and vendor support.

- Comprehensive Implementation Plan. Create an implementation plan that outlines the timeline, milestones, and resources required for successful automation deployment. Consider potential risks and challenges and develop contingency plans. Assign responsibilities and ensure everyone is aligned with the objectives.

- Start Small. Get quick wins with simple projects that generate ROI. Each time you complete an initiative, evaluate and learn from what you have done, then move on to the next one and scale gradually.

- Collaboration. Automation should not be confined to a particular team or limited to a specific use case. To achieve widespread impact across the organization, initiatives should be approached as a collaborative effort that spans different domains and involves members from various teams. Any developed content should be shared across teams, and automation should be written in a manner that is easily comprehensible and modifiable by others.

- Training. Automation often introduces new tools and processes. You need to equip your team with the right education and training to ensure they have the knowledge and skills they need to ensure ongoing success of automation initiatives. In addition, it is important to provide ongoing support, documentation, and access to resources to address key questions.

- Monitor and Evaluate. Regularly analyze data and evaluate the performance of automated processes. Continuously iterate and refine your automation strategy based on the insights gained from measurement indicators such as efficiency gains, error reduction, cost savings, and stakeholder satisfaction.

- Repeat. Automation is not a one-time event but an ongoing journey. Regularly review and update your automation strategy to adapt to changing business needs and technological advancements.

Implementing automation in your organization can revolutionize your IT operations and drive significant benefits. By following the steps outlined above, you can ensure that your automation efforts are successful and aligned with your organization's objectives.

Let’s talk. If you would like to discuss how Keyva and Evolving Solutions can help you implement automation strategies that can drive better business outcomes in your organization, contact us.

(Post title: Mastering Automation: Nine Steps to Implementing Automation Effectively)

Post published 2023-06-27

Keyva Chief Technology Officer Anuj Tuli discusses how DevSecOps allows security to be innately tied to the development and operational work being done by IT teams.

(Post title: CTO Talks: DevSecOps - Security in a Digital Era is a Top Concern)