Blog & Insights

WP_Query Object

(

[query] => Array

(

[post_type] => post

[showposts] => 8

[orderby] => Array

(

[date] => desc

) [autosort] => 0

[paged] => 0

[post__not_in] => Array

(

[0] => 5039

) ) [query_vars] => Array

(

[post_type] => post

[showposts] => 8

[orderby] => Array

(

[date] => desc

) [autosort] => 0

[paged] => 0

[post__not_in] => Array

(

[0] => 5039

) [error] =>

[m] =>

[p] => 0

[post_parent] =>

[subpost] =>

[subpost_id] =>

[attachment] =>

[attachment_id] => 0

[name] =>

[pagename] =>

[page_id] => 0

[second] =>

[minute] =>

[hour] =>

[day] => 0

[monthnum] => 0

[year] => 0

[w] => 0

[category_name] =>

[tag] =>

[cat] =>

[tag_id] =>

[author] =>

[author_name] =>

[feed] =>

[tb] =>

[meta_key] =>

[meta_value] =>

[preview] =>

[s] =>

[sentence] =>

[title] =>

[fields] => all

[menu_order] =>

[embed] =>

[category__in] => Array

(

) [category__not_in] => Array

(

) [category__and] => Array

(

) [post__in] => Array

(

) [post_name__in] => Array

(

) [tag__in] => Array

(

) [tag__not_in] => Array

(

) [tag__and] => Array

(

) [tag_slug__in] => Array

(

) [tag_slug__and] => Array

(

) [post_parent__in] => Array

(

) [post_parent__not_in] => Array

(

) [author__in] => Array

(

) [author__not_in] => Array

(

) [search_columns] => Array

(

) [ignore_sticky_posts] =>

[suppress_filters] =>

[cache_results] => 1

[update_post_term_cache] => 1

[update_menu_item_cache] =>

[lazy_load_term_meta] => 1

[update_post_meta_cache] => 1

[posts_per_page] => 8

[nopaging] =>

[comments_per_page] => 50

[no_found_rows] =>

[order] => DESC

) [tax_query] => WP_Tax_Query Object

(

[queries] => Array

(

) [relation] => AND

[table_aliases:protected] => Array

(

) [queried_terms] => Array

(

) [primary_table] => wp_posts

[primary_id_column] => ID

) [meta_query] => WP_Meta_Query Object

(

[queries] => Array

(

) [relation] =>

[meta_table] =>

[meta_id_column] =>

[primary_table] =>

[primary_id_column] =>

[table_aliases:protected] => Array

(

) [clauses:protected] => Array

(

) [has_or_relation:protected] =>

) [date_query] =>

[request] => SELECT SQL_CALC_FOUND_ROWS wp_posts.ID

FROM wp_posts

WHERE 1=1 AND wp_posts.ID NOT IN (5039) AND ((wp_posts.post_type = 'post' AND (wp_posts.post_status = 'publish' OR wp_posts.post_status = 'expired' OR wp_posts.post_status = 'acf-disabled' OR wp_posts.post_status = 'tribe-ea-success' OR wp_posts.post_status = 'tribe-ea-failed' OR wp_posts.post_status = 'tribe-ea-schedule' OR wp_posts.post_status = 'tribe-ea-pending' OR wp_posts.post_status = 'tribe-ea-draft')))

ORDER BY wp_posts.post_date DESC

LIMIT 0, 8

[posts] => Array

(

[0] => WP_Post Object

(

[ID] => 5123

[post_author] => 7

[post_date] => 2025-07-08 16:21:14

[post_date_gmt] => 2025-07-08 16:21:14

[post_content] => Keyva is pleased to announce the certification of the Keyva BMC Atrium Data Pump and the Keyva HP uCMDB Data Pump for the new ServiceNow Yokohama release. Clients can now seamlessly upgrade their ServiceNow App from previous ServiceNow releases (Xanadu, Vancouver) to the Yokohama release. The ServiceNow Yokohama release delivers enhanced AI-driven workflows, improved user experiences, and expanded automation capabilities to increase productivity, resilience, and service efficiency across the enterprise. Keyva’s BMC Atrium™ CMDB Data Pump synchronizes CIs, CI attributes, and relationships between the ServiceNow CMDB and the BMC Atrium™ CMDB systems. Keyva’s HP Universal CMDB Data Pump synchronizes CIs, CI attributes, and relationships between the ServiceNow CMDB and the HP Universal CMDB systems. Both integrations allow organizations to leverage their existing investment in enterprise software and avoid costly "Rip and Replace" projects. To learn more about the Keyva ServiceNow Integrations Hub for CMDB products and view all the ServiceNow releases for which Keyva has been certified at the ServiceNow Store, visit http://bit.ly/4lnWjBU.

[post_title] => Keyva ServiceNow Integrations for CMDB Data Pump Certified for Yokohama Release

[post_excerpt] =>

[post_status] => publish

[comment_status] => closed

[ping_status] => closed

[post_password] =>

[post_name] => keyva-servicenow-integrations-for-cmdb-data-pump-certified-for-yokohama-release

[to_ping] =>

[pinged] =>

[post_modified] => 2025-07-09 12:42:51

[post_modified_gmt] => 2025-07-09 12:42:51

[post_content_filtered] =>

[post_parent] => 0

[guid] => https://keyvatech.com/?p=5123

[menu_order] => 0

[post_type] => post

[post_mime_type] =>

[comment_count] => 0

[filter] => raw

) [1] => WP_Post Object

(

[ID] => 5117

[post_author] => 7

[post_date] => 2025-06-17 14:56:29

[post_date_gmt] => 2025-06-17 14:56:29

[post_content] => In today’s fast-paced digital landscape, many organizations face a delicate balancing act when it comes to IT service management: a dynamic tug-of-war between innovation and stability. On one hand, businesses must sprint to adapt, embracing rapid evolution to stay competitive or risk fading into obsolescence. On the other, they must anchor themselves in control and stability, or things quickly run amok.

What is ITIL?

ITIL stands for Information Technology Infrastructure Library. It is a structured framework for managing IT services that emphasizes processes, governance, and risk management. ITIL serves as the voice of reason and discipline, the steady hand that ensures that IT operations remain reliable, compliant, and tightly aligned with business goals.

The Contrasting Nature of Agile

Agile is a framework that emphasizes flexibility and the ability to adapt rapidly to change and evolving software. Agile methodology is concerned with driving the rapid creation and refinement of software products. It champions creativity and collaboration to deliver incremental value through relentless sprints. It is a necessary approach when adopting generative AI or other emerging technologies.

The Great Question for Technology-Driven Organizations

Here lies the challenge: how do you balance risk while remaining fast-moving? This is one of the many questions that business leaders find themselves asking.

- How do you ensure the continuous delivery of new patches and enhancements while also safeguarding your operations in terms of efficiency and compliance?

- How do you combine the governance of ITIL with the advancement of your products?

- How do you combine the compliance needs of ITIL or auditing with the relentless pursuit of innovation?

The Struggle is Real

These frameworks are based on contrasting cultures. While Agile is fast-moving, focused on rapid innovation and adaptation, ITIL moves at a measured pace that emphasizes compliance, stability, auditability, and process control. Their differences are visible in other ways too:

- They are typically used by different teams with distinct responsibilities. ITIL is often the domain of operations, while Agile is embraced by development teams.

- ITIL is prescriptive and operates on an SLA-based approach, while Agile methodology is adaptive and works on an iterative approach.

- Each framework is measured differently. Agile teams focus on velocity indicators like development speed, feature delivery rates, and sprint completion. ITIL managers emphasize predictability metrics such as service uptime, incident resolution times, and change success rates.

Keyva's Bridge-Building Approach

Keyva recognizes that the solution isn't about choosing sides. It is about creating a unified model that utilizes both frameworks to create business value across the board. We help organizations recognize that IT value creation happens in the Agile space, while sustainable change management lives in the ITIL domain. By creating standardized models that translate business value across both frameworks, we enable companies to innovate rapidly without sacrificing operational excellence. This allows our clients to still move swiftly to seize opportunity, while being able to sleep at night knowing that everything is orderly and compliant. Our solutions serve as catalysts for organizational transformation by facilitating knowledge transfer across departments and uniting previously siloed teams. Through a comprehensive suite of strategic integrations, we enable organizations to harness Agile methodologies for rapid IT value creation while simultaneously leveraging ITIL frameworks to drive cultural change and structural adaptation throughout the enterprise.

A Solution Example

Here is a great example of how Keyva is helping companies merge the contrasting styles of ITIL and Agile into a workable and collaborative approach. The change management process of ITIL is often perceived as slow and bureaucratic, especially when changes must pass through a Change Advisory Board (CAB) for approval. This causes production delays as Agile teams wait for approval to enact their changes. Keyva addresses this challenge by introducing automation and risk-based decision-making into the process. We work with our clients to define clear risk metrics that we then use to categorize changes based on their potential impact. Low-risk changes can be approved automatically, while only those with a high-risk profile require manual review or further testing. This approach ensures that routine or minor updates move forward quickly, while still maintaining the necessary governance and documentation for compliance and historical records. This solution addresses both sides of the equation. Agile teams can still sprint much of the time, while ITIL personnel know that risk is being addressed properly.

A Master of Both

What makes Keyva so effective at helping our customers attain the proper balance between speed and governance is that we have skills and expertise in both frameworks. That allows us to support them at both strategic and tactical levels. Strategically, we guide organizations in aligning these frameworks to their business goals. On a tactical level, we implement a wide range of integrations and offer tools designed to follow best practices for both ITIL and Agile. By leveraging the knowledge we have accumulated from countless integrations, we can help lead the cultural and structural transformations your organization needs to thrive in this digitally transformed world. We'll demonstrate how to streamline your processes and provide comprehensive training on both frameworks to ensure that your teams amplify each other's strengths rather than working at cross-purposes. Let us help you transform framework friction into competitive advantage. Contact Keyva today to unite your development speed with operational excellence.

[post_title] => How Keyva Bridges the Divide to Unite ITIL and Agile for Success

[post_excerpt] =>

[post_status] => publish

[comment_status] => closed

[ping_status] => closed

[post_password] =>

[post_name] => how-keyva-bridges-the-divide-to-unite-itil-and-agile-for-success

[to_ping] =>

[pinged] =>

[post_modified] => 2025-06-17 14:56:29

[post_modified_gmt] => 2025-06-17 14:56:29

[post_content_filtered] =>

[post_parent] => 0

[guid] => https://keyvatech.com/?p=5117

[menu_order] => 0

[post_type] => post

[post_mime_type] =>

[comment_count] => 0

[filter] => raw

) [2] => WP_Post Object

(

[ID] => 5032

[post_author] => 15

[post_date] => 2025-06-16 01:00:29

[post_date_gmt] => 2025-06-16 01:00:29

[post_content] => Imagine having to haul water bucket by bucket into your home from the river, for utilitarian purposes.
You get up in the morning, pick up the bucket, walk half asleep all the way to the river, fill up your bucket, feel happy at the sound of water, and happily bring it back home. Then that bucket of water is gone within 10 minutes when you take a shower. Now you need water to drink. So, you pick up that bucket again and do the same thing all over again. This time you feel happy to get the exercise but wish there was a time-saving way. Maybe you could hire someone to do the same monotonous job of picking up the bucket and bringing it back filled, over and over throughout the day, at your beck and call if you hired them 24x7. Maybe you could build a pipeline, but that would take hours of your off-work time for almost a year. You are weary at the prospect of losing family time. And you don’t want the neighbors to benefit from the pipeline because they will likely not put in the work with you. Now imagine a similar scenario at work: seeing error logs from your application servers - all kinds of errors, in your production servers that serve thousands of customers. You have a production support team set up to observe these logs and pick out the ones that are precious for your business - the ones that need to be acted upon. There are a lot of them because your customers are diverse, and they seem to love to expose the bugs in your application (inadvertently!). Some of the logs are just asking for maintenance, such as a file system that has filled up in memory and needs to be cleaned up. If the production support personnel think deeply enough, they will create a ticket (in Jira or ServiceNow or whatever your means of communication with your L2 support or development team is) and say that the application needs to develop some smarts and clean up unnecessary files from memory when it fills up to a certain number of GBs.
Some logs plainly expose your customers' human errors: they deleted, by mistake, a file in the installation folders that your application expects; or a certain format of data is expected in a file in the installation and your customer’s team messed it up while editing it manually; or maybe your customer entered a bad password the legendary 3 times and is now locked out of the application. Some logs may indicate errors by your customer support personnel during a customer support call, such as asking a customer to modify a file in the installation folders when that update did not work well with your application. And then there’s the sea of logs that mean well - they say your application is running well; they just outline the various steps your development team wants to know about in the dreaded circumstance of an error happening for an end customer. Mind you, the logs are helpful - not necessarily troublesome. They help the business react to the current and changing needs of your customers. So you decide to implement an event management application such as BMC Event Manager (BEM), BMC Helix Operations Management (BHOM), or Microsoft System Center Operations Manager (SCOM) so that you can direct all logs to events that are easy to view, filter, and act upon. You can filter events by type, by time window, and more. This way you can make more sense of them. If an event, like a file not existing, has occurred 10 times for different customers in a few hours, maybe there is a bug that the application development team needs to figure out and fix. If a certain event, such as an incorrect database query, has fired 10 times in a few hours for only one customer, the customer might need to call your L1 support and get their query corrected or troubleshoot what is wrong. If there are a number of heartbeat OK events, that means application servers are responding to customers fine.
If, out of 10 servers, 8 are sending heartbeat OK and 2 are sending connection failed, you need to either look at those 2 on-prem servers or figure out why the autoscaling in your cloud did not replace these servers automatically. There are a variety of actions you may want to take based on what your logs are saying, at different depths of analysis of the events, possibly in different contexts held by different stakeholders. These events may be of different value to different teams such as your CTO, DevOps team, application development team, product managers, project managers, etc. Meanwhile, your application development and DevOps teams have been getting sidetracked by random ad hoc requests coming from various stakeholders. Your L1 production support teams ask your development team to fix a recurring bug with multiple customers. Your CTO asks your application development team to create a graphical metric of how many unique customers were served in the past few months. Your product owner asks the DevOps team to spin up 2 additional servers to serve more customers, as customers have been experiencing latency in your application; business is growing. All these additional requests arise based on the events in your Event Management system. So, your architectural leadership decides to add an additional piece to your ecosystem - an incident management system such as ServiceNow. This way your L1 support team, your CTO, your product owner, and others create incidents for your application development team and your DevOps teams to pick up and implement according to a priority associated with the incident. This surely increases the efficiency of your on-ground teams. But now, your stakeholders have to parse the events to create incidents in your Incident Management system, and they also have to ensure that duplicate incidents are not created.
This can be accomplished by an integration that automates incident creation for most of the logs or events. The Keyva team presents the Event Integration product. It is ready to use and to customize for your particular use case. If you were to build middleware that would do this, it would take you a year or more, employing a team of developers, a product owner, a project manager, and testers. If you want to deploy a solution in under an hour, Keyva’s Event Integration can help. Event Integration automates the creation of meaningful incidents from your Event Management system. You can customize filters that club events together (such as heartbeat OK) to be logged into an existing incident so that they do not create a clutter of useless tickets. You can also customize it to ignore events that are not useful to the business. Event Integration can enable 2-way communication between your Event Manager and Incident Manager. When an incident is closed at the Incident Manager end, the Event Manager can be notified to close all the relevant events as well. You can deploy a ServiceNow MID (Management, Instrumentation, and Discovery) Server to ensure security and encryption of all communication to and from ServiceNow (the Incident Management system). No additional hardware or software training is required to use the Event Integration product. We work closely with you to get your Event Integration running and functional within an hour. Keyva’s software support team is available over email, phone, and live sessions to fix issues you may face in the future or answer any questions you may have. Licensing of the product is easy to procure for temporary usage or long-term permanent usage. You can configure the mapping between particular fields of TSOM (BMC TrueSight Operations Management) or any other source event manager and ServiceNow or any other target incident manager.
New incident table fields can be created and associated with fields related to events. That way, incidents created by the integration make the most sense to the team at the receiving end. Event fields can be transformed to certain values in the incident using transform mapping functions. For example, an event status of CRITICAL can be mapped to, say, the number 1 in the incident status. Multiple functions such as $SUBSTRING and $LOOKUP are available for accurate customizations, exactly how your business needs them. [Figure: BEM and bem2snow Event Integration log views] Installation is easy and available for Windows and Linux operating systems. It uses the JDK (Java Development Kit). An incident can be created manually through the Event Management console for testing purposes. Multiple versions of BMC Remedy/BEM/TSOM/BHOM, ServiceNow, Microsoft SCOM/SCCM, and MicroFocus MFSM/NNMi/ArcSight ESM products are already supported. Event Integration runs as a service or daemon and is easy to troubleshoot from its logs. Individual events can be traced to the corresponding incidents using a correlation ID or system ID. Numerous high-profile clients across the globe are already benefiting from the seamless automation achieved by Event Integration. No major bug fixes have been requested in the more than 20 years that it has been serving customers. It is a very stable product. We welcome enhancement requests. Recall the analogy I gave earlier of hauling water bucket by bucket. You can now effectively cut the manual, monotonous labor in your IT operations. If you have an Event Management system and an Incident Management system that you would like to integrate, or if you have unwieldy application logs that you want to decipher and act upon automatically, let’s connect. Contact us today. [table id=10 /]
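
The filtering, clubbing, and transform mapping described in this post can be sketched roughly as follows. This is an illustrative sketch only, not Event Integration's actual configuration syntax or API: the event fields, the `SEVERITY_TO_STATUS` table, `IGNORED_TYPES`, and the `lookup`, `substring`, and `IncidentStore` names are all hypothetical, chosen for the example. It shows a lookup-style mapping (CRITICAL to 1), a substring-style transform, ignoring events that are not useful, and logging repeated events into an existing incident instead of opening duplicates.

```python
from dataclasses import dataclass, field

# Hypothetical lookup table: event severity -> incident status number.
SEVERITY_TO_STATUS = {"CRITICAL": 1, "MAJOR": 2, "MINOR": 3}

# Hypothetical set of event types that should never open an incident.
IGNORED_TYPES = {"DEBUG_TRACE"}


def lookup(table, key, default=None):
    """Lookup-style transform: map a source value through a table."""
    return table.get(key, default)


def substring(text, start, length):
    """Substring-style transform: take `length` characters from `start`."""
    return text[start:start + length]


@dataclass
class Incident:
    correlation_id: str
    status: int
    short_description: str
    event_count: int = 1


@dataclass
class IncidentStore:
    """In-memory stand-in for an incident management system."""
    incidents: dict = field(default_factory=dict)

    def handle(self, event):
        """Create an incident, club the event into an existing one, or ignore it."""
        if event["type"] in IGNORED_TYPES:
            return None
        # Events that share host and type are clubbed into one incident.
        key = f'{event["host"]}:{event["type"]}'
        existing = self.incidents.get(key)
        if existing is not None:
            existing.event_count += 1
            return existing
        incident = Incident(
            correlation_id=key,
            status=lookup(SEVERITY_TO_STATUS, event["severity"], default=4),
            short_description=substring(event["message"], 0, 40),
        )
        self.incidents[key] = incident
        return incident


store = IncidentStore()
events = [
    {"host": "app01", "type": "FILE_MISSING", "severity": "CRITICAL",
     "message": "Required file config.xml not found in installation folder"},
    {"host": "app01", "type": "FILE_MISSING", "severity": "CRITICAL",
     "message": "Required file config.xml not found in installation folder"},
    {"host": "app02", "type": "DEBUG_TRACE", "severity": "MINOR",
     "message": "step 3 complete"},
]
results = [store.handle(e) for e in events]
for incident in store.incidents.values():
    print(incident.correlation_id, incident.status, incident.event_count)
```

Keying incidents on a correlation value (here host plus event type, an assumption for the example) is what lets the second event update the existing incident rather than create a duplicate ticket.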

[post_title] => The Role of Event Integration in IT Service Management

[post_excerpt] =>

[post_status] => publish

[comment_status] => closed

[ping_status] => closed

[post_password] =>

[post_name] => the-role-of-event-integration-in-it-service-management

[to_ping] =>

[pinged] =>

[post_modified] => 2025-06-05 18:45:22

[post_modified_gmt] => 2025-06-05 18:45:22

[post_content_filtered] =>

[post_parent] => 0

[guid] => https://keyvatech.com/?p=5032

[menu_order] => 0

[post_type] => post

[post_mime_type] =>

[comment_count] => 0

[filter] => raw

) [3] => WP_Post Object

(

[ID] => 5109

[post_author] => 15

[post_date] => 2025-06-09 01:05:26

[post_date_gmt] => 2025-06-09 01:05:26

[post_content] => Our client was struggling with the complexities of securing, managing, and optimizing its AWS environment. As part of its modernization efforts, the client wanted to adopt DevOps methodologies, including infrastructure as code and native AWS security features. In addition, it needed to migrate its containerized applications from on-premises systems to Amazon Elastic Kubernetes Service (EKS), adding another layer of complexity.

[post_title] => Case Study: AWS Cloud Migration and DevOps

[post_excerpt] =>

[post_status] => publish

[comment_status] => closed

[ping_status] => closed

[post_password] =>

[post_name] => case-study-aws-cloud-migration-and-devops

[to_ping] =>

[pinged] =>

[post_modified] => 2025-06-09 15:53:22

[post_modified_gmt] => 2025-06-09 15:53:22

[post_content_filtered] =>

[post_parent] => 0

[guid] => https://keyvatech.com/?p=5109

[menu_order] => 0

[post_type] => post

[post_mime_type] =>

[comment_count] => 0

[filter] => raw

) [4] => WP_Post Object

(

[ID] => 5099

[post_author] => 15

[post_date] => 2025-06-04 13:37:35

[post_date_gmt] => 2025-06-04 13:37:35

[post_content] => Monitoring has long been a staple of the IT toolset. But as IT leaders become more focused on delivering measurable business value, the limitations of simple monitoring are becoming more apparent. Traditional monitoring is reactive by nature and misses the nuances that can provide proactive indications that the environment is headed toward a problem. Alerts from one system don’t always indicate a root cause lurking in another system, oftentimes leading to a Whack-A-Mole approach where a fix to one system causes issues in another. This can lead to an environment where teams focus more on absolving their domain as the source of an issue than on achieving a quick mean time to repair (MTTR). The reality is simple: You can’t fix what you don’t see. And you certainly can’t improve it. Observability takes simple monitoring to a new level where issues are triangulated among systems to show the true chain of cause and effect throughout an IT stack. Done right, observability can surface leading indicators of risk, letting IT teams proactively address issues before they threaten to impact the business. It helps correlate events with their causes. Observability helps you see the big picture, understand what's really going on across your systems, and get ahead of potential problems to ensure IT continues to deliver its intended business value. Unified observability helps IT teams reduce risk and make informed decisions faster, and it helps the business stay competitive. A highly mature observability program can even be a competitive advantage. Observability goes beyond toolsets and encompasses a new way of thinking about how to integrate and use the monitoring tools you already have. The shift is more about strategy and less about tools and technologies.

Observability vs. Visibility

Observability is the process of gathering, aggregating, normalizing, and presenting data from across your IT monitoring tools (including APM tools, logs, traces, EDR, SIEMs, and more) to make sense of it and create comprehensive visibility across systems. Observability gives you the power to see across domains, functions, and systems. Visibility is the outcome you get when observability is done well. It’s the ability to take a deeper look into your stack and understand what’s happening in a meaningful way, whether you’re in infrastructure, security, application development, or operations. In this way, observability is a new way of collaborating across silos and creates a common source of truth for all IT stakeholders.

The Cost of Operating Without Observability

Without comprehensive observability, teams will continue to invest valuable time in reacting to problems inside their silos, dashboards, and their interpretation of root causes. Operating without observability is a missed opportunity. You can’t see the signals that tell you a problem is coming. You can’t automate a response. You can’t innovate. You’re stuck spending resources just to keep the lights on. In today’s business environment, keeping the lights on isn’t enough because the competition is making progress where you aren’t. From a security perspective, the risk is even greater. If you're relying solely on signature-based detection, you may be blind to real threats and miss the ability to identify anomalous behavior across multiple vectors.

The Power of a Unified Approach

Observability enables teams to identify potential risks to the business, including things like system stability or data loss. It provides factual insights to help IT leaders make more informed, data-driven decisions, helps IT teams pivot from reactive to proactive, and ensures that IT delivers its intended value. It allows you to correlate symptoms with root causes, taking the guesswork out of troubleshooting. Observability starts with the data you’re already generating, then centralizes and normalizes it for analysis, which leads to a unified view of your environment. With enough data, you can get better context for what’s happening and gain the ability to spot leading indicators of risk. This gives you time to act before an issue begins to impact the business. The ability to personalize insights for each stakeholder is what makes observability valuable for the entire organization. The ability to draw from a single source of truth about IT operations means you can deliver consistent insights for each role. That’s important because observability data needs to be presented in a way that’s easily consumable by key IT stakeholders, each of whom needs specific insights about specific things. For example:

- Executives who need visibility into risk, ROI, and alignment with business goals

- Infrastructure teams who focus on system health, availability, and scalability

- Application development leaders who want performance data, user insights, and fast feedback loops

- IT operations teams who care about day-to-day reliability and responsiveness

- Security teams that rely on cross-domain visibility for threat detection and incident response

- Faster root cause analysis

- Automated remediation of common issues

- Smarter change management

- Better alignment with business objectives

Tangible Business Benefits

Observability helps the business understand how IT is driving business value. It links IT health directly to outcomes like revenue protection, risk reduction, and strategic agility. Quantitative benefits of observability can include:

- Reduced MTTR: Teams resolve issues faster because they can pinpoint the real cause

- Increased uptime: Systems are more stable, available, and predictable

- Optimized costs: Better data leads to better infrastructure sizing, fewer manual tasks, and more automation

- Shorter change windows: You have the confidence to move faster and by leveraging asset relationship views, make informed decisions that reduce application downtimes

- Better work life for IT teams: Teams spend less time troubleshooting and more time innovating

- Improved customer satisfaction: Fewer disruptions and faster fixes make happy users

- Stronger security posture: More complete insight leads to faster, smarter threat detection and response

- Reduced risk profile: By tying business processes back to the IT processes that support them, you better understand vulnerabilities, availability, and reliability.

Measuring Success with Observability

Success with observability results in your IT organization spending less time reacting and more time delivering value. One of the simplest ways to measure success is to look at two metrics:

- Increased uptime and availability

- Decreased MTTR

- Reduced incident volume over time

- Shorter change windows

- Better customer experience scores

- Faster security response times
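As a rough illustration of how the first two metrics in this list could be tracked, the sketch below computes MTTR and availability from a list of incident records. The `Incident` type, its field names, and the sample timestamps are assumptions made for this example; they do not come from any particular monitoring tool.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Incident:
    """Hypothetical incident record: when an outage was detected and resolved."""
    detected: datetime
    resolved: datetime


def mean_time_to_repair(incidents: list[Incident]) -> timedelta:
    """Average of (resolved - detected) across all incidents."""
    if not incidents:
        return timedelta(0)
    total = sum((i.resolved - i.detected for i in incidents), timedelta(0))
    return total / len(incidents)


def availability(incidents: list[Incident], start: datetime, end: datetime) -> float:
    """Fraction of the window [start, end] not spent in an incident.

    Assumes every incident falls inside the window and incidents do not overlap.
    """
    window = end - start
    downtime = sum((i.resolved - i.detected for i in incidents), timedelta(0))
    return 1.0 - downtime / window


# Illustrative data only: one 60-minute and one 30-minute incident in June.
incidents = [
    Incident(datetime(2025, 6, 1, 9, 0), datetime(2025, 6, 1, 10, 0)),
    Incident(datetime(2025, 6, 10, 14, 0), datetime(2025, 6, 10, 14, 30)),
]
mttr = mean_time_to_repair(incidents)
uptime = availability(incidents, datetime(2025, 6, 1), datetime(2025, 7, 1))
print(f"MTTR: {mttr}, availability: {uptime:.4%}")
```

Tracking the same two numbers month over month is one simple way to see whether incident volume and repair time are trending in the right direction.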

How Evolving Solutions and Keyva Can Help

Whether you're just beginning your observability journey or trying to scale a mature practice, Evolving Solutions and Keyva can help clients create an effective observability approach that can drive tangible business outcomes. We focus on delivering real business outcomes, not just dashboards. We meet clients where they are to help them define a strategy, select the right tools, or more fully utilize tools they already have to maximize their existing investments. We also have the talent to help you choose the right technology for your organization, structure a roadmap, and co-create a solution. With a broad view across clients and industries, we see what works and what doesn’t, which helps you avoid common pitfalls. Contact us today to get started. [table id=14 /]

[post_title] => Beyond Monitoring: The Case for Unified IT Observability

[post_excerpt] =>

[post_status] => publish

[comment_status] => closed

[ping_status] => closed

[post_password] =>

[post_name] => beyond-monitoring-the-case-for-unified-it-observability

[to_ping] =>

[pinged] =>

[post_modified] => 2025-06-04 13:37:35

[post_modified_gmt] => 2025-06-04 13:37:35

[post_content_filtered] =>

[post_parent] => 0

[guid] => https://keyvatech.com/?p=5099

[menu_order] => 0

[post_type] => post

[post_mime_type] =>

[comment_count] => 0

[filter] => raw

) [5] => WP_Post Object

(

[ID] => 5076

[post_author] => 7

[post_date] => 2025-05-21 16:53:27

[post_date_gmt] => 2025-05-21 16:53:27

[post_content] => Read about a client who faced a significant challenge in securing their AWS environment, which exposed the organization to potential service disruptions, security breaches, data exposure, and regulatory non-compliance. Download now.

[post_title] => Case Study: AWS Security for Business Resilience

[post_excerpt] =>

[post_status] => publish

[comment_status] => closed

[ping_status] => closed

[post_password] =>

[post_name] => case-study-aws-security-for-business-resilience

[to_ping] =>

[pinged] =>

[post_modified] => 2025-05-21 16:53:27

[post_modified_gmt] => 2025-05-21 16:53:27

[post_content_filtered] =>

[post_parent] => 0

[guid] => https://keyvatech.com/?p=5076

[menu_order] => 0

[post_type] => post

[post_mime_type] =>

[comment_count] => 0

[filter] => raw

) [6] => WP_Post Object

(

[ID] => 4985

[post_author] => 15

[post_date] => 2025-05-14 16:22:39

[post_date_gmt] => 2025-05-14 16:22:39

[post_content] => Kubernetes powers modern cloud-native infrastructure, but its networking layer often demands more than basic tools can deliver. Enter Cilium, an eBPF-powered Container Network Interface (CNI). This blog explores Cilium’s features, a practical use case with a diagram, step-by-step installation and configuration, and a comparison with Istio. Whether you’re securing workloads or optimizing performance, Cilium’s got something to offer. Let’s dive in!

What is Cilium CNI?

Cilium is an open-source CNI plugin that leverages eBPF (extended Berkeley Packet Filter) to enhance Kubernetes networking. By running custom programs in the Linux kernel, Cilium outpaces traditional CNIs like Flannel or Calico, offering advanced security, observability, and Layer 3-7 control, all without modifying your apps.

Cilium Capability Services Diagram:

Source: https://github.com/cilium/cilium/blob/main/Documentation/images/cilium-overview.png

Key Features of Cilium

- eBPF Performance: Kernel-level packet handling for speed and efficiency.

- Identity-Based Security: Policies tied to Kubernetes labels, not fleeting IPs.

- Layer 7 Enforcement: Controls application traffic (e.g., HTTP, gRPC).

- Transparent Encryption: Secures communication with IPsec or WireGuard.

- Hubble Observability: Real-time network insights.

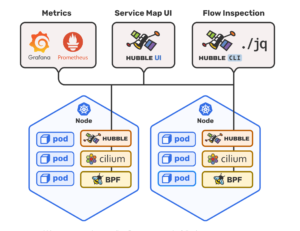

What is Hubble?

Hubble is a fully distributed networking and security observability platform for cloud native workloads. It is built on top of Cilium and eBPF to enable deep visibility into the communication and behavior of services as well as the networking infrastructure in a completely transparent manner. Hubble helps teams with service dependency and communication maps, operational monitoring and alerting, application monitoring, and security observability.

Source: https://github.com/cilium/hubble/blob/main/Documentation/images/hubble_arch.png

Why Cilium?

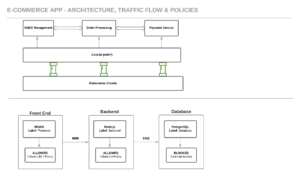

Traditional CNIs hit limits at scale: iptables slows down, and IP-based rules struggle with dynamic pods. Cilium’s eBPF approach and Layer 7 support, paired with Hubble’s visibility, address these gaps. But how does it compare to Istio? We’ll get there. First, a use case.

Use Case: Securing an E-Commerce Microservices App

Imagine managing a Kubernetes cluster for an e-commerce platform with user management, order processing, and payment services implemented using a 3-tier web architecture:

- Frontend: NGINX web server for customer requests.

- Backend: Node.js API for user management, order processing & payment services.

- Database: PostgreSQL for persisting sensitive data.

Requirements

- Secure communication between microservices, ensuring that only authorized services can communicate with each other

- Granular control over HTTP traffic, allowing specific endpoints to be exposed or blocked

- Restrict frontend-to-backend traffic to port 8080.

- Limit backend-to-database traffic to port 5432.

- Block external database access.

- Real-time visibility into network traffic for troubleshooting and security monitoring.

Use Case Diagram

- Arrows (----->): Allowed traffic paths with ports.

- Labels: Used for Cilium’s identity-based policies.

- Blocked: External access denied by default.

Installing Cilium

Let’s install Cilium on your Kubernetes cluster. This section walks through prerequisites, setup, and validation for a smooth deployment.

Prerequisites

- Kubernetes Cluster: Running 1.30+ (e.g., Minikube, Kind, or a cloud provider like EKS/GKE).

- Helm: Version 3.x installed (helm version to check).

- kubectl: Configured to access your cluster.

- Linux Kernel: 4.19.57+ with eBPF support for Cilium 1.14 (most modern distros qualify; check with uname -r).
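The kernel prerequisite can be checked programmatically. The following is a minimal sketch that parses a `uname -r` style string and compares it with a minimum version passed in as a parameter, since the required minimum depends on the Cilium release. The function names and sample release strings are hypothetical and exist only for this illustration.

```python
import platform
import re


def parse_kernel_version(release: str) -> tuple[int, ...]:
    """Extract the leading numeric components from a `uname -r` string."""
    match = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", release)
    if match is None:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    return tuple(int(part) for part in match.groups() if part is not None)


def kernel_at_least(release: str, minimum: tuple[int, ...]) -> bool:
    """True if the parsed release is at or above the minimum version."""
    return parse_kernel_version(release) >= minimum


# Example threshold only; use the minimum documented for your Cilium release.
minimum = (4, 9)

# Sample release strings, followed by the kernel of the machine running this script.
for release in ("5.15.0-91-generic", "3.10.0-1160.el7.x86_64", platform.release()):
    try:
        print(release, kernel_at_least(release, minimum))
    except ValueError as err:
        print(err)
```

On a Linux node, `platform.release()` returns the same string as `uname -r`; on other operating systems the result is not meaningful for this check.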

Step 1: Prepare Your Cluster

Ensure your cluster is ready:

    kubectl get nodes

Step 2: Add Cilium Helm Repository

Add and update the Helm repo:

    helm repo add cilium https://helm.cilium.io/
    helm repo update

Step 3: Install Cilium with Hubble

Deploy Cilium with observability enabled:

    helm install cilium cilium/cilium --version 1.14.0 \
      --namespace kube-system \
      --set kubeProxyReplacement=disabled \
      --set hubble.enabled=true \
      --set hubble.relay.enabled=true \
      --set hubble.ui.enabled=true

- --set kubeProxyReplacement=disabled: Keeps kube-proxy (set to true for full replacement if desired).

- --set hubble.*: Enables Hubble and its UI for monitoring.

Step 4: Verify Installation

Check that the Cilium pods are running:

    kubectl get pods -n kube-system -l k8s-app=cilium

Expect output like:

    NAME            READY   STATUS    RESTARTS   AGE
    cilium-abc123   1/1     Running   0          5m
    cilium-xyz789   1/1     Running   0          5m

Also verify Hubble:

    kubectl get pods -n kube-system -l k8s-app=hubble-relay

Step 5: Test Connectivity

Deploy a simple pod and test networking:

    kubectl run test-pod --image=nginx --restart=Never
    kubectl exec -it test-pod -- curl <another-pod-ip>

Cilium should route traffic seamlessly.
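The pod status check from the installation steps can also be scripted. The sketch below assumes the default table layout printed by `kubectl get pods` (NAME, READY, STATUS, RESTARTS, AGE) and flags any pod that is not Running with all containers ready. The helper name and the sample output are made up for this example.

```python
def unready_pods(kubectl_output: str) -> list[str]:
    """Return names of pods that are not Running with all containers ready.

    Expects the default table printed by `kubectl get pods`
    (columns: NAME READY STATUS RESTARTS AGE).
    """
    problems = []
    lines = kubectl_output.strip().splitlines()
    for line in lines[1:]:  # skip the header row
        fields = line.split()
        if len(fields) < 3:
            continue
        name, ready, status = fields[0], fields[1], fields[2]
        ready_count, _, total = ready.partition("/")
        if status != "Running" or ready_count != total:
            problems.append(name)
    return problems


# Made-up sample: the second pod is still initializing.
sample = """\
NAME            READY   STATUS     RESTARTS   AGE
cilium-abc123   1/1     Running    0          5m
cilium-xyz789   0/1     Init:0/6   0          5m
"""
print(unready_pods(sample))
```

In a real check, the input string could come from running the `kubectl get pods` command through `subprocess.run(..., capture_output=True, text=True)` and reading its `stdout`.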

Configuring Cilium for the Use Case

Now, configure Cilium for our e-commerce app.

Step 1: Label Pods

Add labels to your deployments:

    # Frontend (snippet)
    metadata:
      labels:
        app: frontend

    # Backend
    metadata:
      labels:
        app: backend

    # Database
    metadata:
      labels:
        app: database

Apply: kubectl apply -f <file>.yaml.

Step 2: Apply Network Policies

Define CiliumNetworkPolicy resources:

Policy 1: Frontend-to-Backend

    apiVersion: cilium.io/v2
    kind: CiliumNetworkPolicy
    metadata:
      name: frontend-to-backend
    spec:
      endpointSelector:
        matchLabels:
          app: backend
      ingress:
      - fromEndpoints:
        - matchLabels:
            app: frontend
        toPorts:
        - ports:
          - port: "8080"
            protocol: TCP

Policy 2: Backend-to-Database

    apiVersion: cilium.io/v2
    kind: CiliumNetworkPolicy
    metadata:
      name: backend-to-db
    spec:
      endpointSelector:
        matchLabels:
          app: database
      ingress:
      - fromEndpoints:
        - matchLabels:
            app: backend
        toPorts:
        - ports:
          - port: "5432"
            protocol: TCP

Apply: kubectl apply -f <file>.yaml.

Step 3: Enable Hubble

Start Hubble:

    cilium hubble port-forward &

Monitor:

    hubble observe --label app=backend

Output:

    Feb 22 2025 14:00:01 frontend-xyz -> backend-abc ALLOWED (TCP 8080)
    Feb 22 2025 14:00:02 backend-abc -> database-def ALLOWED (TCP 5432)
    Feb 22 2025 14:00:03 external-ip -> database-def DROPPED

Step 4: Optional Layer 7 Policy

Restrict to GET requests:

    apiVersion: cilium.io/v2
    kind: CiliumNetworkPolicy
    metadata:
      name: frontend-to-backend-l7
    spec:
      endpointSelector:
        matchLabels:
          app: backend
      ingress:
      - fromEndpoints:
        - matchLabels:
            app: frontend
        toPorts:
        - ports:
          - port: "8080"
            protocol: TCP
          rules:
            http:
            - method: "GET"
              path: "/api/.*"

Apply: kubectl apply -f frontend-to-backend-l7.yaml.
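To make the intent of these policies concrete, here is a toy model of the allow/deny decisions they describe. It is not how Cilium evaluates policy in the kernel. The `IngressRule` type and the `is_allowed` function are hypothetical, and the model assumes the Layer 7 rule is the only rule selecting the backend.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class IngressRule:
    """Simplified stand-in for one CiliumNetworkPolicy ingress rule."""
    target_app: str                   # label value in endpointSelector
    source_app: str                   # label value in fromEndpoints
    port: int
    method: Optional[str] = None      # optional HTTP method (Layer 7)
    path_regex: Optional[str] = None  # optional HTTP path regex (Layer 7)


def is_allowed(rules, source_app, target_app, port, method=None, path=None):
    """Decide whether a request is admitted under the simplified model.

    Endpoints selected by no rule accept everything; endpoints selected by
    at least one rule accept only traffic that some rule matches.
    """
    selecting = [r for r in rules if r.target_app == target_app]
    if not selecting:
        return True
    for rule in selecting:
        if rule.source_app != source_app or rule.port != port:
            continue
        if rule.method is not None and rule.method != method:
            continue
        if rule.path_regex is not None and (
            path is None or re.fullmatch(rule.path_regex, path) is None
        ):
            continue
        return True
    return False


rules = [
    IngressRule("backend", "frontend", 8080, method="GET", path_regex="/api/.*"),
    IngressRule("database", "backend", 5432),
]

print(is_allowed(rules, "frontend", "backend", 8080, "GET", "/api/orders"))   # allowed
print(is_allowed(rules, "frontend", "backend", 8080, "POST", "/api/orders"))  # denied
print(is_allowed(rules, "backend", "database", 5432))                         # allowed
print(is_allowed(rules, "external", "database", 5432))                        # denied
```

The key design point mirrored here is identity-based selection: decisions depend on the `app` labels of source and target, never on pod IP addresses.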

Istio vs. Cilium: A Comparison

Cilium and Istio both enhance Kubernetes networking, but they differ in scope and approach.

Overview

- Cilium: CNI for Layer 3-7 networking and security via eBPF.

- Istio: Service mesh for application-layer traffic management via sidecar proxies (Envoy).

Key Differences

| Aspect | Cilium | Istio |
| --- | --- | --- |
| Layer | Kernel (eBPF, L3-L7) | Application (sidecar, L7 focus) |
| Primary Role | Networking + security | Traffic management + observability |
| Performance | High (no proxies) | Sidecar overhead |
| Security | Identity policies, encryption | mTLS, L7 rules |
| Observability | Hubble (flow logs) | Metrics, traces (Prometheus, Grafana) |
| Use Case Fit | Cluster-wide networking | Microservices communication |

Strengths

- Cilium: Lightweight, broad networking scope, deep packet visibility.

- Istio: Advanced L7 features (e.g., retries, canaries), multi-cluster support.

When to Choose?

- Cilium: Ideal for our use case—securing and monitoring cluster traffic efficiently.

- Istio: Better for microservices needing traffic splitting or tracing and complex service-to-service communication patterns.

- Both: Use Cilium for networking, Istio for service mesh features.

The Result

Our e-commerce app gains:

- Security: Locked-down traffic, external threats blocked.

- Visibility: Hubble logs for fast debugging.

- Scalability: eBPF performance at scale.

Conclusion

Cilium transforms Kubernetes networking with eBPF, offering a potent mix of security, speed, and insight. Its installation is straightforward, and it shines in use cases like ours while holding its own against Istio. Try it out, and reach out to Keyva for any professional services support! [table id=13 /]

[post_title] => Cilium CNI & Hubble: A Deep Dive with Installation, Configuration, Use Case Scenario and Istio Comparison

[post_excerpt] =>

[post_status] => publish

[comment_status] => closed

[ping_status] => closed

[post_password] =>

[post_name] => cilium-cni-hubble-a-deep-dive-with-installation-configuration-use-case-scenario-and-istio-comparison

[to_ping] =>

[pinged] =>

[post_modified] => 2025-05-21 16:55:42

[post_modified_gmt] => 2025-05-21 16:55:42

[post_content_filtered] =>

[post_parent] => 0

[guid] => https://keyvatech.com/?p=4985

[menu_order] => 0

[post_type] => post

[post_mime_type] =>

[comment_count] => 0

[filter] => raw

) [7] => WP_Post Object

(

[ID] => 4981

[post_author] => 7

[post_date] => 2025-04-29 15:07:41

[post_date_gmt] => 2025-04-29 15:07:41

[post_content] => Many things are usually easy when you first start out. For example, purchasing a new home is exciting and straightforward at first, but over time, maintenance issues arise, insurance costs and property taxes increase, and neighborhood dynamics change. Suddenly that dream home starts keeping you up at night. It is the same with the cloud.

Beyond the Cloud’s Silver Lining

Cloud adoption is easy at the beginning too. Cloud providers typically offer straightforward onboarding processes for small-scale migrations using an easy-to-use GUI. The pay-as-you-go model seems like a great deal compared to the cost of depreciating datacenter assets, forklift upgrades, and software maintenance. Cloud offers a perfect alternative to fixed depreciating assets, especially when organizations need to scale workloads with seasonal business trends. However, the more services and applications you migrate to the cloud, the more important it becomes to manage these assets in a standardized and streamlined manner.

- Cloud costs can become increasingly difficult to manage as services expand across multiple clouds, given that each cloud provider has a different pricing model. Costs can also escalate rapidly due to factors like data transfer, storage, and unexpected usage spikes.

- With increased cloud adoption, there is a tendency to overlook wasted resources. Once resources are provisioned manually, their continuous existence accrues on the monthly bill; so increased discipline is needed to keep the resource utilization to only what is needed.

- Underutilization of resources is one of the biggest challenges in the Cloud. There is a tendency to overprovision the sizing of resources because they are available, and this causes wasteful spending for organizations.
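One way to surface the overprovisioning described in this list is to compare average and peak utilization against thresholds. The sketch below does this for a small, invented fleet. The `ResourceUsage` fields, the threshold values, and the sample numbers are all assumptions for illustration, not recommendations.

```python
from dataclasses import dataclass


@dataclass
class ResourceUsage:
    """Hypothetical utilization summary for one provisioned resource."""
    name: str
    avg_cpu_pct: float   # average CPU utilization over the review period
    peak_cpu_pct: float  # highest observed CPU utilization
    monthly_cost: float  # in the billing currency


def rightsizing_candidates(resources, avg_threshold=20.0, peak_threshold=50.0):
    """Return resources whose average AND peak utilization are both low."""
    return [
        r for r in resources
        if r.avg_cpu_pct < avg_threshold and r.peak_cpu_pct < peak_threshold
    ]


# Invented sample fleet.
fleet = [
    ResourceUsage("web-1", avg_cpu_pct=55.0, peak_cpu_pct=90.0, monthly_cost=400.0),
    ResourceUsage("batch-1", avg_cpu_pct=8.0, peak_cpu_pct=30.0, monthly_cost=650.0),
    ResourceUsage("report-1", avg_cpu_pct=12.0, peak_cpu_pct=85.0, monthly_cost=300.0),
]
candidates = rightsizing_candidates(fleet)
print([r.name for r in candidates], sum(r.monthly_cost for r in candidates))
```

Requiring both a low average and a low peak avoids flagging resources such as `report-1`, which idle most of the time but still need their capacity during bursts.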

Why aren’t Companies Achieving Cost Optimization?

If the cloud was supposed to be simpler than on-prem, then why does cloud optimization seem elusive to some organizations? A primary reason is the pace at which cloud transformation has taken place; the priority has often been “move to the cloud first and worry about optimization later”. It is an easy trap to get into. At Keyva, we've seen companies spend substantial amounts on cloud migrations, sometimes up to a million dollars per month. Imagine if you could save just 2% of that expenditure. At that level of spending, 2% is $20,000 per month in real savings that could be reinvested in other business initiatives. Another reason optimization takes a while to achieve is that, like anything, the cloud has a learning curve, and some of those lessons can prove quite costly. Consulting with third-party experts who understand common pitfalls and mistakes can help you bypass the trial-and-error phase. These experts can guide you through the optimization process, ensuring you avoid the typical gotchas and costly errors and achieve cost efficiency and ROI sooner.

Achieving Operational Hygiene

We often hear about security hygiene. At Keyva, we also emphasize operational hygiene. That topic covers a lot of ground, and one of the biggest items is right-sizing. Overprovisioning is the #1 culprit of cloud overspending, and it’s surprisingly easy to do. When choosing options in the cloud menu, the largest ones may be selected by default, or you may be driven by the normal human tendency to select a larger option. An organization should have guardrails that ensure a structured process for choosing the right number of right-sized resource bundles. This can be done in several ways: by establishing a committee that must approve larger or more expensive options to prevent unnecessary expenditures, or by using infrastructure as code methodologies. Chargeback and showback features, which enhance cost visibility and accountability, can also help by breaking down the features and resources used by various teams within the organization. Here’s how they work:

- Showback provides detailed reports on cloud resource usage and associated costs without directly billing departments. It can serve as an educational tool to enhance cost awareness and help teams understand their cloud spending patterns and identify areas for cost optimization.

- Chargeback involves directly billing departments or teams for their cloud resource usage, which makes those costs transparent. Tying costs to actual usage prevents wasteful spending and aligns cloud costs with departmental budgets. This approach promotes financial accountability and encourages teams to optimize their resource allocation.
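The difference between the two models can be sketched in a few lines: showback only reports cost per team, while chargeback bills that cost against each team's budget. The team names, resources, costs, and budgets below are invented for the example, and both function names are hypothetical.

```python
from collections import defaultdict

# Hypothetical usage records: (team, resource, cost for the billing period).
usage = [
    ("payments", "eks-cluster", 1200.0),
    ("payments", "rds-postgres", 800.0),
    ("analytics", "s3-storage", 300.0),
    ("analytics", "emr-cluster", 1700.0),
]


def showback(records):
    """Informational report: total cost per team, nothing is billed."""
    totals = defaultdict(float)
    for team, _resource, cost in records:
        totals[team] += cost
    return dict(totals)


def chargeback(records, budgets):
    """Bill each team for its usage and report the remaining budget.

    Assumes every team in the records has an entry in `budgets`.
    """
    return {
        team: {"billed": total, "remaining_budget": budgets[team] - total}
        for team, total in showback(records).items()
    }


report = showback(usage)
invoices = chargeback(usage, budgets={"payments": 2500.0, "analytics": 1500.0})
print(report)
print(invoices)
```

A negative `remaining_budget` (as for the analytics team in this sample) is the kind of signal chargeback is meant to put in front of the team that owns the spend.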

Some Steps are Easy

Cloud optimization doesn't have to be complicated. It can be as simple as turning off resources after use. Just as you learned to turn off lights when leaving a room to save on the electric bill, you should deprovision resources when they're not needed. The sooner guardrails are set up to manage cloud costs, the better it is for adoption. While provisioning cloud native VMs or services in response to demand spikes is typically prioritized, turning them off after intended use or reducing the size and scale of utilized clusters should receive equal attention. All of this can be automated using infrastructure as code pipelines as well. License bundling is another simple but effective strategy for optimizing cloud costs as it helps streamline expenses, enhances flexibility and reduces administrative overhead. Much like cloud computing, where shared resources maximize efficiency, bundled licensing prevents service redundancies and minimizes underutilized cloud instances. By consolidating licenses, organizations ensure they are only paying for what they actually need, leading to greater cost efficiency and a more optimized cloud environment.

The 15-point Approach

At Keyva, we've developed a comprehensive 15-point strategy to achieve cloud optimization. When working with clients, we utilize monitoring to analyze key metrics such as average utilization, volume requests, and peak time utilization. This helps determine the optimal sourcing of resources. Monitoring is only valuable if it leads to actionable insights. At Keyva, we work with all the major cloud vendors, and use Well Architected Framework (WAF) model as a guide to achieving efficiency in systems and capacity. Our certified staff has worked with all major hyperscalers and cloud providers, and implemented WAF framework for multiple clients across various industry verticals. Cloud optimization is about finding real savings that can show an ROI quickly. Find out more about our 15-point strategy by contacting us today. [table id=3 /] [post_title] => Cloud Optimization: Act Now or Pay Later [post_excerpt] => [post_status] => publish [comment_status] => closed [ping_status] => closed [post_password] => [post_name] => cloud-optimization-act-now-or-pay-later [to_ping] => [pinged] => [post_modified] => 2025-05-05 19:59:54 [post_modified_gmt] => 2025-05-05 19:59:54 [post_content_filtered] => [post_parent] => 0 [guid] => https://keyvatech.com/?p=4981 [menu_order] => 0 [post_type] => post [post_mime_type] => [comment_count] => 0 [filter] => raw ) ) [post_count] => 8 [current_post] => -1 [before_loop] => 1 [in_the_loop] => [post] => WP_Post Object ( [ID] => 5123 [post_author] => 7 [post_date] => 2025-07-08 16:21:14 [post_date_gmt] => 2025-07-08 16:21:14 [post_content] => Keyva is pleased to announce the certification of the Keyva BMC Atrium Data Pump and the Keyva HP uCMDB Data Pump for the new ServiceNow Yokohama release. Clients can now seamlessly upgrade their ServiceNow App from previous ServiceNow releases (Xanadu, Vancouver) to the Yokohama release. 
The ServiceNow Yokohama release delivers enhanced AI-driven workflows, improved user experiences, and expanded automation capabilities to increase productivity, resilience, and service efficiency across the enterprise. Keyva’s BMC Atrium™ CMDB Data Pump provides synchronization of the CIs, CI attributes and relationships between the ServiceNow CMDB and the BMC Atrium™ CMDB systems. Keyva’s HP Universal CMDB Data Pump provides synchronization of the CIs, CI attributes and relationships between the ServiceNow CMDB and the HP Universal CMDB systems. Both integrations allow organizations to leverage their existing investment in Enterprise Software and avoid costly "Rip and Replace" projects. Learn more about the Keyva ServiceNow Integrations Hub for CMDB products and view all the ServiceNow releases for which Keyva has been certified at the ServiceNow store, visit http://bit.ly/4lnWjBU. [post_title] => Keyva ServiceNow Integrations for CMDB Data Pump Certified for Yokohama Release [post_excerpt] => [post_status] => publish [comment_status] => closed [ping_status] => closed [post_password] => [post_name] => keyva-servicenow-integrations-for-cmdb-data-pump-certified-for-yokohama-release [to_ping] => [pinged] => [post_modified] => 2025-07-09 12:42:51 [post_modified_gmt] => 2025-07-09 12:42:51 [post_content_filtered] => [post_parent] => 0 [guid] => https://keyvatech.com/?p=5123 [menu_order] => 0 [post_type] => post [post_mime_type] => [comment_count] => 0 [filter] => raw ) [comment_count] => 0 [current_comment] => -1 [found_posts] => 134 [max_num_pages] => 17 [max_num_comment_pages] => 0 [is_single] => [is_preview] => [is_page] => [is_archive] => [is_date] => [is_year] => [is_month] => [is_day] => [is_time] => [is_author] => [is_category] => [is_tag] => [is_tax] => [is_search] => [is_feed] => [is_comment_feed] => [is_trackback] => [is_home] => 1 [is_privacy_policy] => [is_404] => [is_embed] => [is_paged] => [is_admin] => [is_attachment] => [is_singular] => [is_robots] => 
[is_favicon] => [is_posts_page] => [is_post_type_archive] => [query_vars_hash:WP_Query:private] => c24e3921e4eace05e49e21115b773f96 [query_vars_changed:WP_Query:private] => [thumbnails_cached] => [allow_query_attachment_by_filename:protected] => [stopwords:WP_Query:private] => [compat_fields:WP_Query:private] => Array ( [0] => query_vars_hash [1] => query_vars_changed ) [compat_methods:WP_Query:private] => Array ( [0] => init_query_flags [1] => parse_tax_query ) [query_cache_key:WP_Query:private] => wp_query:290f1d99617a9f5a5c706499315b5012:0.37705300 1752232824 [tribe_is_event] => [tribe_is_multi_posttype] => [tribe_is_event_category] => [tribe_is_event_venue] => [tribe_is_event_organizer] => [tribe_is_event_query] => [tribe_is_past] => )

Create Seamless Integrations to Support Single Pane-of-glass Visibility

Manage Your Entire IT Infrastructure with One Service Model. Download now.

Keyva ServiceNow Integrations for CMDB Data Pump Certified for Yokohama Release

Keyva is pleased to announce the certification of the Keyva BMC Atrium Data Pump and the Keyva HP uCMDB Data Pump for the new ServiceNow Yokohama release. Clients can now ...

How Keyva Bridges the Divide to Unite ITIL and Agile for Success

In today’s fast-paced digital landscape, organizations face a delicate balancing act when it comes to IT service management. It is a dynamic tug-of-war between ...

The Role of Event Integration in IT Service Management

Imagine having to haul water, bucket by bucket, from the river into your home for everyday use. You get up in the morning, pick up the bucket, walk all the way ...

Case Study: AWS Cloud Migration and DevOps

Our client was struggling with the complexities of securing, managing, and optimizing its AWS environment. As part of their modernization efforts, they wanted to adopt DevOps methodologies, including infrastructure as ...

Beyond Monitoring: The Case for Unified IT Observability

Monitoring has long been a staple of the IT toolset. But as IT leaders become more focused on delivering measurable business value, the limitations of simple monitoring are becoming more ...

Case Study: AWS Security for Business Resilience

Read about a client who faced a significant challenge in securing their AWS environment, which exposed the organization to potential service disruptions, security breaches, data exposure, and regulatory non-compliance. Download ...

Cilium CNI & Hubble: A Deep Dive with Installation, Configuration, Use Case Scenario and Istio Comparison

Kubernetes powers modern cloud-native infrastructure, but its networking layer often demands more than basic tools can deliver—enter Cilium, an eBPF-powered Container Network Interface (CNI). This blog explores Cilium’s features, a ...

Cloud Optimization: Act Now or Pay Later

Many things are usually easy when you first start out. For example, purchasing a new home is exciting and straightforward at first, but over time, maintenance issues arise, insurance costs ...